Demo: 95% accuracy. Production: 27% accuracy. The culprit? Your AI models are fluent in vendor demos but can't speak the dozens of data dialects flooding your actual security environment.

By Yichen Jin, Co-Founder & CEO, Fleak

The Data Format Problem Killing Your Security AI Performance

Your SIEM's AI features and behavioral analytics platforms worked perfectly in demos. In production, detection accuracy stays frustratingly low. SANS research shows 61% of security teams struggle with workforce limitations, and that strain worsens when analysts spend most of their time on data normalization rather than threat analysis. The core problem isn't your AI agents or fancy models; it's that every security tool formats the same events differently.

Active Directory logs authentication as "EventID 4624," Okta uses "actor.displayName" in JSON, AWS shows "userIdentity.userName," and Azure AD presents "identity.user." Your AI models excel at pattern recognition but struggle when forced to interpret dozens of data formats for identical events.
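To make this concrete, here is a minimal Python sketch that reads the user from each source's record using the field paths named above and emits one shared shape. The Okta, AWS, and Azure AD paths come from the text. The Windows path assumes Event ID 4624 was exported to JSON with its `EventData` block. The sample records and the `VENDOR_USER_PATHS`, `get_path`, and `normalize_auth` names are hypothetical. The output loosely follows OCSF's Authentication class (`class_uid` 3002) and is not a complete OCSF event.

```python
from typing import Any

# Dotted paths to the user field in each source's JSON record. The Okta, AWS,
# and Azure AD paths are the ones named in the text; the Windows path assumes
# Event ID 4624 was exported to JSON with its EventData block intact.
VENDOR_USER_PATHS = {
    "okta": "actor.displayName",
    "aws_cloudtrail": "userIdentity.userName",
    "azure_ad": "identity.user",
    "windows_4624": "EventData.TargetUserName",
}


def get_path(record: dict[str, Any], dotted: str) -> Any:
    """Walk a dotted path such as 'a.b.c'; return None if any key is missing."""
    node: Any = record
    for key in dotted.split("."):
        if not isinstance(node, dict) or key not in node:
            return None
        node = node[key]
    return node


def normalize_auth(source: str, record: dict[str, Any]) -> dict[str, Any]:
    """Emit one shared, OCSF-style shape regardless of which source produced it."""
    return {
        "class_uid": 3002,  # OCSF Authentication class
        "user": {"name": get_path(record, VENDOR_USER_PATHS[source])},
    }


# Hypothetical, heavily trimmed records for the same user from four sources.
samples = {
    "okta": {"actor": {"displayName": "alice"}},
    "aws_cloudtrail": {"userIdentity": {"userName": "alice"}},
    "azure_ad": {"identity": {"user": "alice"}},
    "windows_4624": {"EventID": 4624, "EventData": {"TargetUserName": "alice"}},
}

for source, raw in samples.items():
    print(source, normalize_auth(source, raw))
```

In this design, supporting a new source means adding one path to the table rather than teaching a model a new format.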

The Real AI Readiness Problem

The concept of "AI-ready data" is often misunderstood. Most security data, in its raw form, is far from it. Compare what current systems provide versus what AI agents actually need:

Current Reality (Vendor-Specific Formats) | What AI Agents Actually Need (Standardized) |

Okta, AWS, and Azure each record the same authentication event in a different, vendor-specific format. | One standardized format for authentication events from every source. |

The Critical Difference: The first scenario demands separate training for each vendor's data, creating isolated, inefficient AI models. The second enables a single model to understand authentication across your entire environment, regardless of the source. This is what AI-ready data looks like.

Why Data Inconsistency Cripples AI Detection

Security teams invest millions in AI detection platforms, attracted by plug-and-play solutions that shine in demos. Integration tells a different story: connecting just the first ten log sources often takes six months. Models trained on pristine demo data falter on the messy, heterogeneous data of real enterprise environments. As a result, security teams spend 80% of their time on data plumbing and only 10% on actual threat hunting.

The fundamental issue is simple: AI models excel at pattern recognition but are terrible at handling data schema variations. It's challenging enough to teach a model to detect lateral movement without also requiring it to learn that Windows calls it "Logon Type 3" while Linux refers to it as "ssh connection established." This data inconsistency creates the main bottleneck.
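A minimal Python sketch of the point, under stated assumptions: two hypothetical parsers map a Windows Event ID 4624 record with `LogonType` 3 (a network logon) and an OpenSSH `Accepted <method> for <user> from <ip> port <n>` line into the same `remote_logon` event. A single rule then flags users who log on remotely to at least three distinct hosts. The flattened Windows record shape, the threshold, and the function names are illustrative, not a production lateral-movement detector.

```python
import re
from collections import defaultdict
from typing import Optional

# OpenSSH success message, e.g. "Accepted publickey for bob from 10.0.0.7 port 51514 ssh2"
SSHD_ACCEPTED = re.compile(r"Accepted \S+ for (?P<user>\S+) from (?P<ip>\S+) port \d+")


def from_windows(event: dict) -> Optional[dict]:
    """Windows Event ID 4624 with LogonType 3 is a network logon."""
    if event.get("EventID") == 4624 and event.get("LogonType") == 3:
        return {"activity": "remote_logon", "user": event["TargetUserName"],
                "src_ip": event["IpAddress"], "dst_host": event["Computer"]}
    return None


def from_sshd(host: str, line: str) -> Optional[dict]:
    """Map an sshd 'Accepted ...' line from a Linux host's auth.log."""
    m = SSHD_ACCEPTED.search(line)
    if m is None:
        return None
    return {"activity": "remote_logon", "user": m["user"],
            "src_ip": m["ip"], "dst_host": host}


def fan_out_users(events: list[dict], threshold: int = 3) -> list[str]:
    """One rule for every source: users with remote logons to >= threshold hosts."""
    hosts_by_user: dict[str, set[str]] = defaultdict(set)
    for e in events:
        if e["activity"] == "remote_logon":
            hosts_by_user[e["user"]].add(e["dst_host"])
    return sorted(u for u, hosts in hosts_by_user.items() if len(hosts) >= threshold)


raw = [
    from_windows({"EventID": 4624, "LogonType": 3, "TargetUserName": "bob",
                  "IpAddress": "10.0.0.7", "Computer": "FILESRV01"}),
    from_sshd("web-01", "Accepted publickey for bob from 10.0.0.7 port 51514 ssh2"),
    from_sshd("db-01", "Accepted password for bob from 10.0.0.7 port 51600 ssh2"),
    # LogonType 2 is an interactive logon, so this one is ignored.
    from_windows({"EventID": 4624, "LogonType": 2, "TargetUserName": "carol",
                  "IpAddress": "-", "Computer": "LAPTOP7"}),
]
print(fan_out_users([e for e in raw if e is not None]))  # ['bob']
```

The detection rule never sees a vendor format; only the two small parsers do.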

But there's a hidden bottleneck that most vendors don't mention: centralized AI processing creates a second performance wall. Even after you solve the data format problem, sending millions of normalized events to a central AI service introduces significant latency and escalating costs. This is why many "AI-ready" solutions still struggle with real-time detection at enterprise scale.

At Fleak, we deploy parsers all the way to the edge. Logs are parsed locally, where they are generated, and only normalized, AI-ready data is sent downstream. This reduces bandwidth by 60-80% (structured events are far lighter than raw logs) and enables real-time local AI processing.
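The edge pattern can be sketched in a few lines of Python, assuming a verbose JSON source record and a hypothetical list of fields the downstream model needs. The `edge_forward` helper parses the line where it is produced, forwards only the kept fields, and reports the fraction of bytes saved. Real savings depend on how verbose the source format is and which fields you keep, so this toy record is not evidence for any particular percentage.

```python
import json

# Fields assumed to be needed downstream; everything else stays at the edge.
KEEP = ("time", "user", "src_ip", "action")


def edge_forward(raw_line: str) -> tuple[bytes, float]:
    """Parse one raw JSON log line locally; return (compact payload, fraction of bytes saved)."""
    record = json.loads(raw_line)
    compact = {k: record[k] for k in KEEP if k in record}
    payload = json.dumps(compact, separators=(",", ":")).encode("utf-8")
    saved = 1 - len(payload) / len(raw_line.encode("utf-8"))
    return payload, saved


# A made-up verbose record: a few useful fields plus bulky diagnostic data.
raw_line = json.dumps({
    "time": "2024-01-15T10:30:00Z", "user": "alice", "src_ip": "10.0.0.5",
    "action": "login", "agent_build": "7.4.2-rc1",
    "host_inventory": ["cpu"] * 40, "debug_context": "x" * 300,
})
payload, saved = edge_forward(raw_line)
print(payload.decode("utf-8"))
print(f"{saved:.0%} fewer bytes sent downstream")
```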

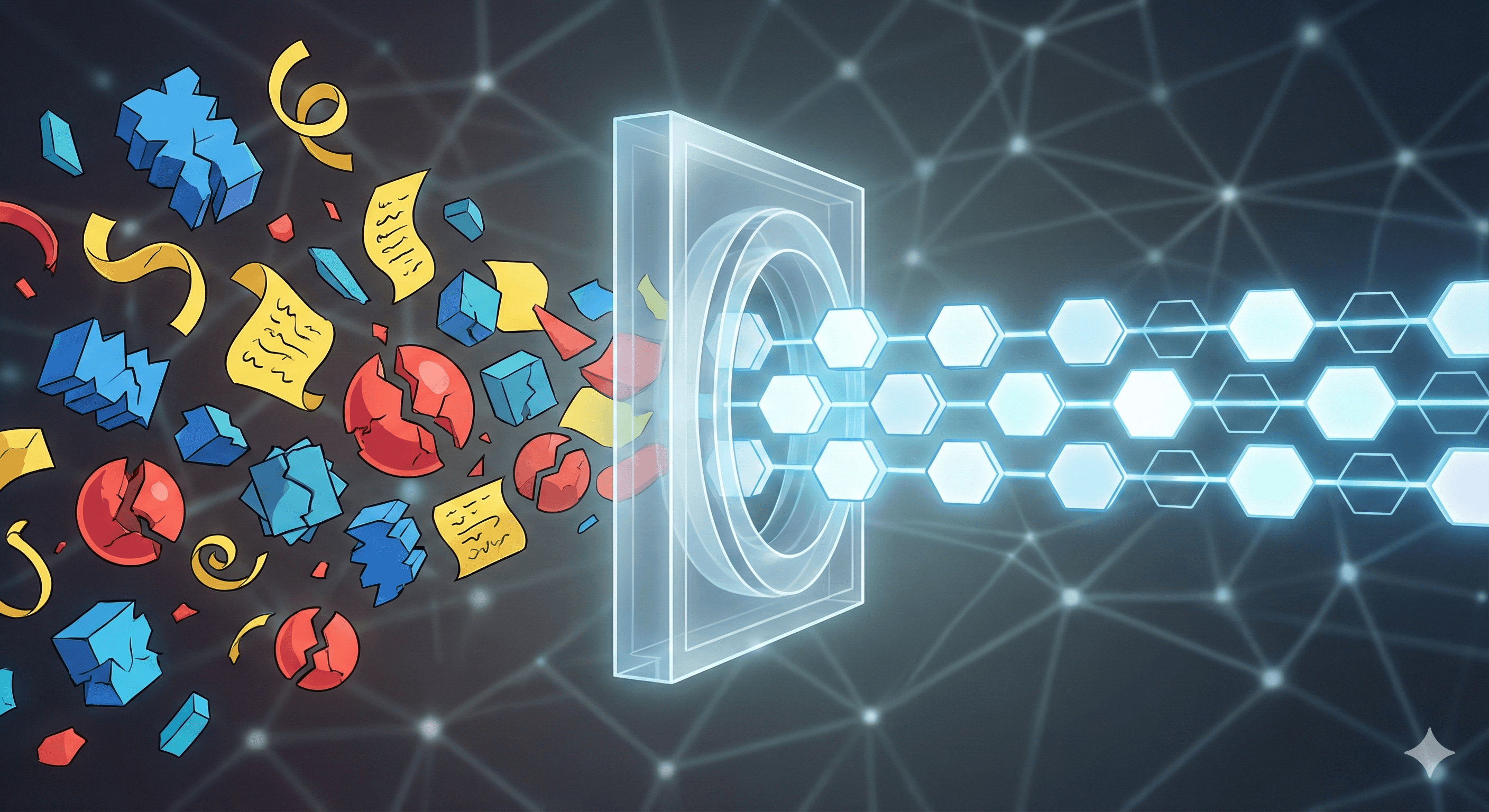

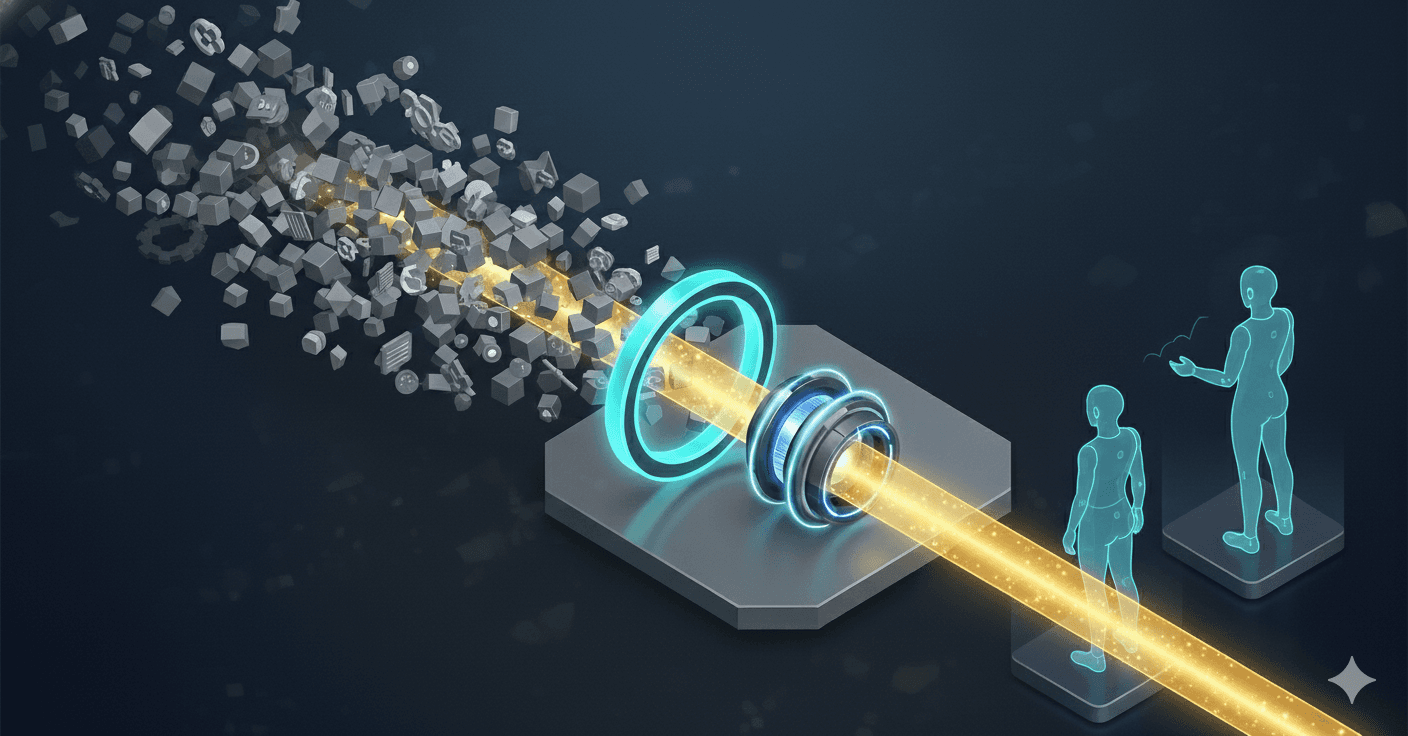

The Universal Data Gateway: A Unified Approach

At Fleak, we built a solution to this common problem: the Universal Semantic Layer as a data gateway. The concept is straightforward: instead of building hundreds of AI agents for an equal number of disparate data sources, we construct one intelligent semantic translation layer. This layer's sole purpose is to make all security data speak the same, unified language.

Here's how we implemented it:

Component | Functionality | Key Benefits |

Ingestion Layer | Handles any input format (JSON, CEF, Syslog, custom). Real-time streaming with <100ms latency. No data storage required (pure transformation). | Versatile Input: Accepts all data types. High Performance: Near real-time processing. Lightweight: No storage footprint. |

AI Translation Engine | Uses LLMs to understand log semantics, not just syntax. Learns from each new data source automatically. Generates OCSF-compliant output (industry standard). | Semantic Understanding: Goes beyond syntax. Automated Learning: Adapts to new sources. Standardized Output: OCSF compliance. |

Validation & Learning Loop | Continuously refines accuracy with feedback. Handles schema changes without breaking downstream systems. Currently achieving 90%+ accuracy on required fields. | Continuous Improvement: Self-correcting. Resilient: Adapts to schema changes. High Accuracy: Reliable data mapping. |
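The Ingestion Layer's first job is recognizing what it has been handed. Here is a rough Python sketch of format detection plus CEF header parsing. The detection heuristics (a parseable JSON object, a `CEF:` marker, a leading syslog `<PRI>`), the `detect_format` and `parse_cef_header` names, and the sample vendor are assumptions. The header layout (`CEF:Version|Device Vendor|Device Product|Device Version|Device Event Class ID|Name|Severity|Extension`, with `\|` escaping a literal pipe) follows the ArcSight CEF format. The sketch does not parse the key=value extension or handle an escaped backslash right before a pipe.

```python
import json
import re

# The seven CEF header fields, in order, before the key=value extension.
CEF_HEADER_KEYS = ("version", "device_vendor", "device_product", "device_version",
                   "device_event_class_id", "name", "severity")
SYSLOG_PRI = re.compile(r"^<\d{1,3}>")  # syslog lines start with <PRI>
UNESCAPED_PIPE = re.compile(r"(?<!\\)\|")


def detect_format(line: str) -> str:
    """Heuristic guess at the input format; falls back to 'custom'."""
    text = line.lstrip()
    if text.startswith("{"):
        try:
            json.loads(text)
            return "json"
        except json.JSONDecodeError:
            pass
    if "CEF:" in text:  # CEF often arrives wrapped in a syslog header
        return "cef"
    if SYSLOG_PRI.match(text):
        return "syslog"
    return "custom"


def parse_cef_header(line: str) -> dict[str, str]:
    """Split the CEF header on unescaped pipes; leave the extension unparsed."""
    body = line[line.index("CEF:") + len("CEF:"):]
    parts = UNESCAPED_PIPE.split(body, maxsplit=7)
    header = dict(zip(CEF_HEADER_KEYS, parts))  # zip stops after the 7 header keys
    header["extension"] = parts[7] if len(parts) > 7 else ""
    return header


sample = ("<134>Jan 15 10:30:00 fw01 CEF:0|Acme|Firewall|1.0|100|"
          "Connection allowed|3|src=10.0.0.5 dst=192.168.1.100")
print(detect_format(sample))                      # cef
print(parse_cef_header(sample)["device_vendor"])  # Acme
```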

Here's a real example from our production environment showing the transformation:

Raw Input (Cisco ASA Log):

```text
%ASA-6-302013: Built outbound TCP connection 1234 for outside:192.168.1.100/80 (192.168.1.100/80) to inside:10.0.0.5/54321 (10.0.0.5/54321)
```

Transformed Output (OCSF Network Activity):

```json
{
  "category_name": "Network Activity",
  "class_name": "Network Connection",
  "time": "2024-01-15T10:30:00Z",
  "src_endpoint": {
    "ip": "10.0.0.5",
    "port": 54321,
    "location": "inside"
  },
  "dst_endpoint": {
    "ip": "192.168.1.100",
    "port": 80,
    "location": "outside"
  },
  "connection_info": {
    "protocol_name": "TCP",
    "direction": "outbound"
  }
}
```

Now, any AI agent can understand this connection event without needing to decipher Cisco's specific log format.
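For illustration, here is a hand-written Python parser for this one message type. It is not the LLM-driven translation engine described above; it is a regex sketch that produces the same endpoint and connection fields as the example output. It assumes the Cisco convention that for an `outbound` connection the initiator is the host after `to`, and for `inbound` it is the host after `for`, which matches how the example assigns `src_endpoint` and `dst_endpoint`. `class_name` and `time` are left out because they cannot be read from the message body alone, and the regex ignores IPv6 and the optional identity-firewall user fields. The name `parse_asa_302013` is hypothetical.

```python
import re
from typing import Optional

# %ASA-6-302013 body: "Built {inbound|outbound} TCP connection <id> for
# <if>:<ip>/<port> (<mapped_ip>/<mapped_port>) to <if>:<ip>/<port> (<mapped_ip>/<mapped_port>)"
ASA_302013 = re.compile(
    r"%ASA-\d-302013: Built (?P<direction>inbound|outbound) (?P<proto>TCP) connection \d+ "
    r"for (?P<a_if>[\w-]+):(?P<a_ip>[\d.]+)/(?P<a_port>\d+) \([^)]*\) "
    r"to (?P<b_if>[\w-]+):(?P<b_ip>[\d.]+)/(?P<b_port>\d+) \([^)]*\)"
)


def _endpoint(m: re.Match, side: str) -> dict:
    """Build an endpoint from the 'a' (after 'for') or 'b' (after 'to') side."""
    return {"ip": m[f"{side}_ip"], "port": int(m[f"{side}_port"]), "location": m[f"{side}_if"]}


def parse_asa_302013(line: str) -> Optional[dict]:
    m = ASA_302013.search(line)
    if m is None:
        return None
    # Assumed convention: for outbound connections the initiator is the host
    # after "to"; for inbound connections it is the host after "for".
    src, dst = ("b", "a") if m["direction"] == "outbound" else ("a", "b")
    return {
        "category_name": "Network Activity",
        "src_endpoint": _endpoint(m, src),
        "dst_endpoint": _endpoint(m, dst),
        "connection_info": {"protocol_name": m["proto"], "direction": m["direction"]},
    }


line = ("%ASA-6-302013: Built outbound TCP connection 1234 for outside:192.168.1.100/80 "
        "(192.168.1.100/80) to inside:10.0.0.5/54321 (10.0.0.5/54321)")
print(parse_asa_302013(line))
```

Writing and maintaining one of these per message type, per vendor, is the burden the translation layer is meant to absorb.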

The Transformative Performance Impact

The Universal Data Gateway significantly improves performance, as shown in these metrics:

Metric | Before Universal Data Gateway | After Universal Data Gateway |

Log Source Integration Time | 6 months (average) | 15 minutes |

Authentication Models Needed | 1 model per system | 1 unified model (across all systems) |

Engineer Time Allocation | 80% on data integration | 80% on actual threat hunting |

Our system handles over 20,000 events per second with under 100ms latency, achieving 90% accuracy on required OCSF fields. It works with any LLM.
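The post does not spell out how required-field accuracy is measured. One plausible approach, sketched in Python below, compares generated records against hand-verified (known-good) ones field by field and counts a field as correct only when both values match. The `REQUIRED` field list, the sample records, and the function names are hypothetical.

```python
from functools import reduce
from typing import Any

# Hypothetical required fields for a network event; adjust per OCSF class.
REQUIRED = ("src_endpoint.ip", "src_endpoint.port", "dst_endpoint.ip",
            "dst_endpoint.port", "connection_info.protocol_name")


def get_path(record: dict[str, Any], dotted: str) -> Any:
    """Follow a dotted path; None if any step is missing."""
    return reduce(lambda node, key: node.get(key) if isinstance(node, dict) else None,
                  dotted.split("."), record)


def required_field_accuracy(pairs: list[tuple[dict, dict]]) -> float:
    """Share of (record, required field) checks where generated matches known-good."""
    checks = [
        get_path(gold, field) is not None and get_path(gen, field) == get_path(gold, field)
        for gen, gold in pairs
        for field in REQUIRED
    ]
    return sum(checks) / len(checks) if checks else 0.0


gold = {"src_endpoint": {"ip": "10.0.0.5", "port": 54321},
        "dst_endpoint": {"ip": "192.168.1.100", "port": 80},
        "connection_info": {"protocol_name": "TCP"}}
wrong_port = {**gold, "dst_endpoint": {"ip": "192.168.1.100", "port": 443}}
print(required_field_accuracy([(gold, gold), (wrong_port, gold)]))  # 0.9
```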

Why OCSF is Essential for Modern SOC

OCSF (Open Cybersecurity Schema Framework) creates a unified view across enterprise environments:

Consistent field names across all event types.

Standardized timestamps and identifiers for accurate correlation.

Hierarchical categorization (category → class → activity) for structured understanding.

Rich metadata that provides crucial context for AI analysis.
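The category → class → activity hierarchy is also encoded in OCSF's numeric identifiers: an event's `type_uid` is `class_uid * 100 + activity_id`, so a single value pins down both class and activity. Here is a small Python sketch using two real OCSF classes: Authentication (`class_uid` 3002, in the Identity & Access Management category, `category_uid` 3) and Network Activity (`class_uid` 4001, `category_uid` 4). The lookup tables cover only the activities shown, and `ocsf_ids` is a hypothetical helper.

```python
# OCSF identifiers for two classes: name -> (category_uid, class_uid).
OCSF_CLASSES = {
    "Authentication": (3, 3002),    # category: Identity & Access Management
    "Network Activity": (4, 4001),  # category: Network Activity
}
# Only the activities used here: Authentication "Logon" and Network Activity "Open".
ACTIVITIES = {("Authentication", "Logon"): 1, ("Network Activity", "Open"): 1}


def ocsf_ids(class_name: str, activity: str) -> dict[str, int]:
    """Return the category, class, activity, and derived type identifiers."""
    category_uid, class_uid = OCSF_CLASSES[class_name]
    activity_id = ACTIVITIES[(class_name, activity)]
    return {
        "category_uid": category_uid,
        "class_uid": class_uid,
        "activity_id": activity_id,
        "type_uid": class_uid * 100 + activity_id,  # OCSF rule for type_uid
    }


print(ocsf_ids("Authentication", "Logon")["type_uid"])  # 300201
```

A query for `type_uid` 300201 then returns every logon, whichever product reported it.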

This means instead of training separate models for:

Windows authentication (EventID 4624)

Linux authentication (auth.log patterns)

Cloud authentication (API calls)

VPN authentication (network device logs)

You train one model on OCSF authentication events, and it works universally.
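Because every source lands in the same shape, the feature extraction in front of a model only has to be written once. Here is a minimal Python sketch, assuming four OCSF-style Authentication records that originally came from Windows, Linux, a cloud API, and a VPN. All values are made up, and `time` is in epoch milliseconds, as OCSF uses. The feature choice and the `featurize` name are hypothetical. Nothing in the function branches on the source.

```python
from datetime import datetime, timezone

# OCSF-style Authentication records from four different sources (values made up).
EVENTS = [
    {"time": 1705314600000, "status_id": 1, "user": {"name": "alice"},
     "src_endpoint": {"ip": "10.0.0.5"}, "metadata": {"product": {"name": "Windows Security"}}},
    {"time": 1705314660000, "status_id": 2, "user": {"name": "alice"},
     "src_endpoint": {"ip": "203.0.113.9"}, "metadata": {"product": {"name": "Linux sshd"}}},
    {"time": 1705314720000, "status_id": 1, "user": {"name": "alice"},
     "src_endpoint": {"ip": "10.0.0.5"}, "metadata": {"product": {"name": "AWS CloudTrail"}}},
    {"time": 1705314780000, "status_id": 1, "user": {"name": "alice"},
     "src_endpoint": {"ip": "198.51.100.4"}, "metadata": {"product": {"name": "VPN gateway"}}},
]


def featurize(event: dict, known_ips: set[str]) -> list[float]:
    """Identical features for every source: UTC hour, failure flag, unfamiliar source IP."""
    hour = datetime.fromtimestamp(event["time"] / 1000, tz=timezone.utc).hour
    failed = 1.0 if event["status_id"] == 2 else 0.0  # OCSF status_id 2 = Failure
    new_ip = 0.0 if event["src_endpoint"]["ip"] in known_ips else 1.0
    return [float(hour), failed, new_ip]


for e in EVENTS:
    print(e["metadata"]["product"]["name"], featurize(e, known_ips={"10.0.0.5"}))
```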

What True AI-Ready Data Unleashes

AI-ready security data enables these capabilities across security operations:

Build AI Agents That Actually Work

Deploy AI at Scale

Future-Proof Your Security Stack

The Technical Reality

Building this system was complex. We solved several key challenges:

Handling Vendor Schema Changes: Log formats are notoriously prone to unannounced changes. Our AI-driven system automatically adapts, with robust fallback mechanisms to prevent data loss.

Maintaining Processing Speed: Real-time requirements rule out batch processing. We met them with a streaming architecture built on Apache Kafka, which scales horizontally to handle enterprise volumes.

Ensuring Accuracy: Continuous learning from human feedback, rigorous validation against known good mappings, and confidence scoring for quality control are all integral to maintaining high accuracy.
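Two of these mechanisms can be sketched together in Python. Each target field has a list of candidate source paths tried in order, so a silent rename falls through to the other name instead of dropping the field, and anything left over is kept under `unmapped` (OCSF's attribute for source data without a schema home). Each translated event then gets a simple confidence score, the share of target fields filled, and low scorers go to a review queue whose corrections could feed the learning loop. The candidate paths, the threshold, and the scoring rule are hypothetical, and the AI-driven adaptation described above is not modeled.

```python
from functools import reduce
from typing import Any

# Candidate source paths per target field, tried in order (all hypothetical).
CANDIDATES = {
    "user.name": ["user.login", "username"],
    "src_endpoint.ip": ["client.ip", "src_ip"],
    "time": ["ts", "timestamp"],
}
THRESHOLD = 0.8  # hypothetical cut-off for automatic acceptance


def get_path(record: dict[str, Any], dotted: str) -> Any:
    """Follow a dotted path; None if any step is missing."""
    return reduce(lambda node, key: node.get(key) if isinstance(node, dict) else None,
                  dotted.split("."), record)


def set_path(out: dict[str, Any], dotted: str, value: Any) -> None:
    """Create nested dicts as needed and set the leaf value."""
    *parents, leaf = dotted.split(".")
    node = out
    for key in parents:
        node = node.setdefault(key, {})
    node[leaf] = value


def map_with_fallback(raw: dict[str, Any]) -> dict[str, Any]:
    """Map known fields via fallback paths; keep everything else under 'unmapped'."""
    out: dict[str, Any] = {}
    consumed: set[str] = set()
    for target, paths in CANDIDATES.items():
        for path in paths:
            value = get_path(raw, path)
            if value is not None:
                set_path(out, target, value)
                consumed.add(path.split(".")[0])  # coarse: marks the whole top-level key
                break
    leftovers = {k: v for k, v in raw.items() if k not in consumed}
    if leftovers:
        out["unmapped"] = leftovers  # keep unmapped data instead of dropping it
    return out


def confidence(event: dict[str, Any]) -> float:
    """Share of target fields the mapping managed to fill."""
    return sum(get_path(event, t) is not None for t in CANDIDATES) / len(CANDIDATES)


def route(raw_events: list[dict[str, Any]]) -> tuple[list[dict], list[dict]]:
    """Translate events and split them into accepted and human-review queues."""
    accepted: list[dict] = []
    review: list[dict] = []
    for raw in raw_events:
        event = map_with_fallback(raw)
        (accepted if confidence(event) >= THRESHOLD else review).append(event)
    return accepted, review


old_schema = {"username": "alice", "src_ip": "10.0.0.5", "ts": 1705314600000, "session": "abc"}
new_schema = {"user": {"login": "alice"}, "client": {"ip": "10.0.0.5"},
              "ts": 1705314600000, "session": "abc"}
partial = {"username": "bob", "ts": 1705314600000}

print(map_with_fallback(old_schema) == map_with_fallback(new_schema))  # True
accepted, review = route([old_schema, new_schema, partial])
print(len(accepted), len(review))  # 2 1
```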

The Bottom Line

The future of security AI is not only about smarter models. It is about feeding existing models consistent, standardized data. A universal data gateway with semantic understanding transforms security data from a frustrating liability into a powerful asset.

Instead of building 200 different AI agents for 200 data sources, you build one AI agent that understands all your data. That is the difference between demo AI and production AI. Organizations that implement this first will have AI systems that detect real threats instead of just processing alerts.

Technical implementation details and open-source components are available at https://docs.fleak.ai/zephflow/tutorials/standardize_ciscoasa_to_ocsf. Currently in production with Fortune 500 enterprises processing 10M+ events per day.
