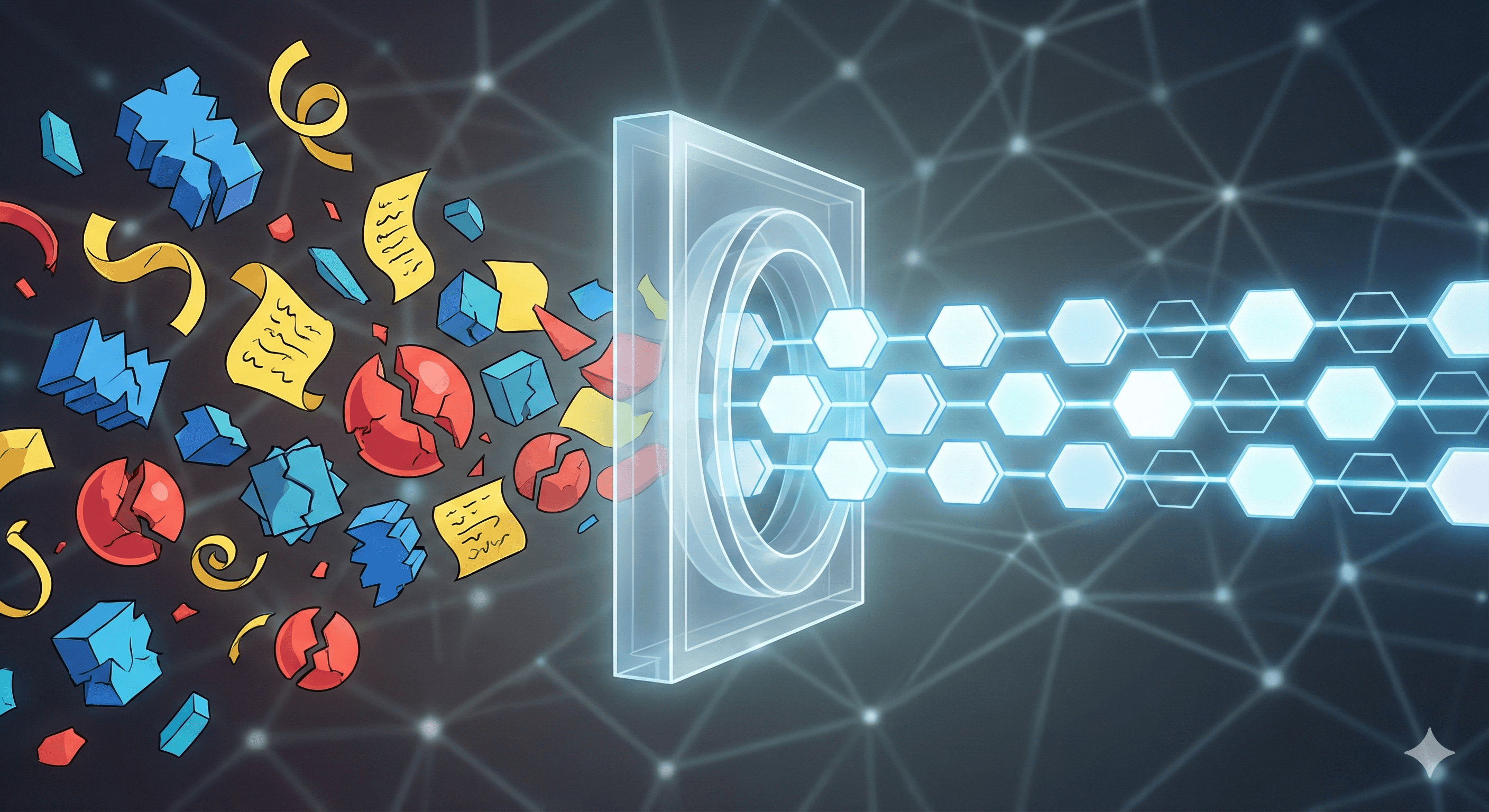

Security teams invest in AI but see no ROI because they're building on a broken data foundation. This isn't a security failure; it's a data engineering problem solved by prioritizing high-quality, normalized data over raw volume.

By Bo Lei, Co-Founder & CTO, Fleak

During my years building large-scale data pipelines at places like Netflix and Splunk, I worked closely with security teams and kept seeing the same pattern. Security organizations invest heavily in sophisticated, AI-driven platforms, but the promised ROI never materializes. The reason is one every data engineer will recognize: they're building on a broken data foundation.

A 2022 report found that 55% of security teams admit that critical alerts are being missed. This isn't a failing of the security specialists; it's a classic data engineering failure, one that the data world has been tackling for over a decade.

The Data Foundation: A Familiar Engineering Challenge

Security data platforms should prioritize signal quality over raw volume. This isn't a new idea—it's the same principle that governs everything from financial transaction monitoring to A/B testing infrastructure. High-quality data is clean, normalized, and enriched with business context.

1. The Normalization Trap. The debate over a common schema like OCSF reminds me of the endless "schema-on-read vs. schema-on-write" arguments in the data warehousing world. The goal is right, but the execution is often flawed. Teams chase a perfect, all-encompassing schema from day one, a monolithic approach that rarely ships.

A more pragmatic strategy is to enforce strict normalization on a small, high-impact set of core fields (timestamp, actor.user.name, src.ip, dest.ip, action.name) across your most critical data sources first. A consistent timestamp across your EDR and cloud logs is more valuable than a perfect, 200-field schema for just one of them. Deliver value incrementally.

2. Enrichment is Just a High-Stakes Join. The next step, enrichment, is a task any data engineer knows well: joining event streams with context tables. In security, this means fusing raw logs with business context. Is the src.ip a developer's ephemeral container or a production database? Is the actor.user.name a temp contractor or the CFO? This is no different from joining a user activity stream with a customer profile to personalize an experience. The most valuable data often isn't in a third-party threat feed; it's in your own CMDB and identity provider.
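To make the two steps above concrete, here is a minimal sketch in Python. It assumes two hypothetical sources ("edr" and "cloud") with made-up raw field names, and small in-memory tables standing in for a CMDB and an identity provider. The mappings, table contents, and the `asset.role` and `actor.user.role` output fields are illustrative assumptions, not a real product schema.

```python
import ipaddress
from datetime import datetime, timezone

# Hypothetical per-source mappings: raw field name -> core field name.
FIELD_MAPS = {
    "edr": {"event_time": "timestamp", "username": "actor.user.name",
            "local_ip": "src.ip", "remote_ip": "dest.ip",
            "operation": "action.name"},
    "cloud": {"eventTime": "timestamp", "userName": "actor.user.name",
              "sourceIp": "src.ip", "targetIp": "dest.ip",
              "eventName": "action.name"},
}

# Hypothetical context tables standing in for a CMDB and an identity provider.
CMDB = [
    (ipaddress.ip_network("10.20.0.0/16"), "production-database"),
    (ipaddress.ip_network("10.99.0.0/16"), "ephemeral-dev-container"),
]
IDENTITY = {"jdoe": "CFO", "tmp-417": "temp contractor"}


def to_utc_iso(value):
    """Convert epoch seconds or an ISO 8601 string to a UTC ISO 8601 string."""
    if isinstance(value, (int, float)):
        dt = datetime.fromtimestamp(value, tz=timezone.utc)
    else:
        dt = datetime.fromisoformat(value.replace("Z", "+00:00"))
        if dt.tzinfo is None:
            dt = dt.replace(tzinfo=timezone.utc)  # assumption: naive means UTC
    return dt.astimezone(timezone.utc).isoformat()


def normalize(source, raw):
    """Keep only the core fields, renamed, with one timestamp format."""
    mapping = FIELD_MAPS[source]
    event = {core: raw[name] for name, core in mapping.items() if name in raw}
    if "timestamp" in event:
        event["timestamp"] = to_utc_iso(event["timestamp"])
    event["source"] = source
    return event


def asset_role(ip):
    """Look up which kind of asset owns an IP; 'unknown' if no network matches."""
    addr = ipaddress.ip_address(ip)
    for network, role in CMDB:
        if addr in network:
            return role
    return "unknown"


def enrich(event):
    """Join a normalized event with asset and identity context."""
    enriched = dict(event)
    for side in ("src", "dest"):
        ip = event.get(f"{side}.ip")
        if ip is not None:
            enriched[f"{side}.asset.role"] = asset_role(ip)
    user = event.get("actor.user.name")
    enriched["actor.user.role"] = IDENTITY.get(user, "unknown")
    return enriched


edr = enrich(normalize("edr", {
    "event_time": 1700000000, "username": "tmp-417",
    "local_ip": "10.99.3.7", "remote_ip": "10.20.1.15", "operation": "connect",
}))
cloud = enrich(normalize("cloud", {
    "eventTime": "2023-11-14T22:13:20Z", "userName": "jdoe",
    "sourceIp": "10.20.1.15", "eventName": "ConsoleLogin",
}))
```

Both events end up with the same timestamp format and field names, so a detection rule can be written once against `src.ip` and `actor.user.role` instead of once per source. In a real pipeline the lookup tables would be refreshed from the systems of record rather than hard-coded.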

The Payoff: From Raw Events to Actionable Signals

Consider this common scenario: the "login from a new country" alert. This is the difference between a raw event and a stateful, processed feature.

The Raw Event: Login from new country: 'North Korea'

This is unactionable noise. It forces an analyst to manually perform the enrichment and correlation that the data pipeline should have already done.

The Processed Signal: "CRITICAL: A Finance Controller's account, which has never touched a production system, just logged into a PCI-DSS tagged database server from an IP (37.x.x.x) in North Korea that was flagged by VirusTotal 10 minutes ago."

This is an incident, not an alert. The signal is clear because the pipeline did the heavy lifting. This is the level of quality required to build reliable automation (SOAR) that doesn't crumble under the weight of ambiguity.
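One way to picture the difference is a small stateful scorer that remembers what each user has done before and counts how many independent risk factors an enriched login carries. The sketch below is an assumption-laden illustration: the field names (`src.geo.country`, `dest.asset.env`, `dest.asset.tags`, `src.threat.flagged`) and the "one severity level per reason" rule are hypothetical, not a recommended scoring model.

```python
from collections import defaultdict
from dataclasses import dataclass, field

SEVERITIES = ("INFO", "LOW", "MEDIUM", "HIGH", "CRITICAL")


@dataclass
class UserState:
    """What the pipeline remembers about one user between events."""
    countries: set = field(default_factory=set)
    touched_production: bool = False


class LoginScorer:
    def __init__(self):
        self.state = defaultdict(UserState)

    def score(self, event):
        """Score an already-enriched login event against the user's history."""
        user = self.state[event["actor.user.name"]]
        country = event["src.geo.country"]
        is_prod = event.get("dest.asset.env") == "production"

        reasons = []
        if user.countries and country not in user.countries:
            reasons.append("login from a new country")
        if is_prod and not user.touched_production:
            reasons.append("first access to a production system")
        if "pci-dss" in event.get("dest.asset.tags", []):
            reasons.append("target is tagged pci-dss")
        if event.get("src.threat.flagged"):
            reasons.append("source IP flagged by threat intel")

        # Update state only after scoring, so the event is judged against the past.
        user.countries.add(country)
        user.touched_production = user.touched_production or is_prod

        # Illustrative rule: each independent reason raises severity one level.
        return {"severity": SEVERITIES[len(reasons)], "reasons": reasons}


scorer = LoginScorer()
routine = scorer.score({
    "actor.user.name": "finance.controller", "src.geo.country": "US",
    "dest.asset.env": "corporate", "dest.asset.tags": [],
    "src.threat.flagged": False,
})
incident = scorer.score({
    "actor.user.name": "finance.controller", "src.geo.country": "KP",
    "dest.asset.env": "production", "dest.asset.tags": ["pci-dss"],
    "src.threat.flagged": True,
})
```

Here `routine` scores as INFO, while `incident` accumulates all four reasons and scores as CRITICAL. The "new country" fact alone would only reach LOW. It is the combination with role, asset, and threat context that makes the event worth waking someone up for.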

A Pragmatic Roadmap

Security teams often feel overwhelmed by the scope of building a data platform. The key is to treat it like any other data product and break the work into phases to build momentum.

Define a Data Product, Not a Data Source. Don't start with "let's onboard firewall logs." Start with a use case: "We need a reliable data product for detecting ransomware-like lateral movement." This immediately defines your scope (e.g., EDR and Active Directory logs) and your customers (the detection engineering team).

Build a Minimum Viable Pipeline (MVP). Ingest only the necessary log sources. Normalize only the most critical fields. Add just two enrichment sources—one internal (user roles) and one external (threat intel). The goal is to get a small stream of high-quality data flowing and establish an initial SLA.

Measure Pipeline Quality, Not Just Alerts. The most compelling metric for leadership is efficiency. Show them the numbers: "Previously, this threat category generated 500 alerts/month, all false positives. Our new pipeline produced five, of which four were actionable." This is how you justify further investment.
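The numbers in that example reduce to two simple ratios: alert precision (actionable alerts divided by total alerts) and volume reduction. A minimal sketch of the arithmetic, using the figures quoted above and a hypothetical helper name:

```python
def alert_precision(total_alerts, actionable_alerts):
    """Fraction of alerts worth an analyst's time; None if there were no alerts."""
    if total_alerts == 0:
        return None
    return actionable_alerts / total_alerts


# Figures from the example: 500 alerts with none actionable, then 5 with 4 actionable.
precision_before = alert_precision(500, 0)
precision_after = alert_precision(5, 4)
volume_reduction = 1 - 5 / 500

print(f"precision before: {precision_before:.0%}")
print(f"precision after:  {precision_after:.0%}")
print(f"alert volume cut: {volume_reduction:.0%}")
```

Precision moves from 0% to 80% while alert volume drops by 99%. Tracking both per threat category over time gives leadership a trend line rather than a single anecdote.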

The Inevitable Hurdles

Three challenges consistently emerge in these projects, and they are the same ones that plague enterprise data programs everywhere.

Missing Data Contracts. When a network upgrade changes a firewall log format without warning, the security pipeline breaks. This is an organizational problem that requires a technical solution: a "data contract" between the data producer (the network team) and the data consumer (the security team).

Unchecked Cloud Costs. Without a FinOps mindset, the costs for storing and querying terabytes of security data can spiral. This platform is a real-time production system and must be managed with the same cost discipline as any other.

The Maintenance Treadmill. Data pipelines are living systems. Source APIs change, formats break, and new enrichments are needed. Research shows up to 85% of big data and AI projects fail, often due to this underestimation of ongoing maintenance. This is an operational commitment.
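The data contract idea from the first hurdle can start very small: a versioned description of the fields the consumer depends on, checked at the pipeline's edge so that a format change fails loudly instead of silently breaking detections. The sketch below assumes a hypothetical firewall feed. The contract contents and the `violations` helper are illustrative, not a standard.

```python
import ipaddress

# Hypothetical contract agreed between the network team and the security team.
FIREWALL_CONTRACT = {
    "version": 1,
    "required": {
        "timestamp": str,
        "src.ip": str,
        "dest.ip": str,
        "action.name": str,
    },
    "allowed_actions": {"allow", "deny", "drop"},
}


def violations(record, contract=FIREWALL_CONTRACT):
    """Return a list of human-readable contract violations (empty if valid)."""
    problems = []
    for name, expected_type in contract["required"].items():
        if name not in record:
            problems.append(f"missing field: {name}")
        elif not isinstance(record[name], expected_type):
            problems.append(f"wrong type for {name}: {type(record[name]).__name__}")

    for name in ("src.ip", "dest.ip"):
        value = record.get(name)
        if isinstance(value, str):
            try:
                ipaddress.ip_address(value)
            except ValueError:
                problems.append(f"invalid IP in {name}: {value}")

    action = record.get("action.name")
    if isinstance(action, str) and action not in contract["allowed_actions"]:
        problems.append(f"unexpected action: {action}")
    return problems


good = {"timestamp": "2024-01-01T00:00:00+00:00", "src.ip": "10.0.0.5",
        "dest.ip": "10.20.1.15", "action.name": "deny"}
# After an unannounced upgrade, the source field is renamed and actions change.
changed = {"timestamp": "2024-01-01T00:00:00+00:00", "source_address": "10.0.0.5",
           "dest.ip": "10.20.1.15", "action.name": "BLOCKED"}

good_problems = violations(good)
changed_problems = violations(changed)
```

Records that fail the check can be routed to a quarantine stream and counted, which turns "the pipeline broke" into a measurable signal that the producer and consumer can both see. The same check run in the producer's CI is what makes it a contract rather than just validation.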

Building a proper security data platform is a serious engineering effort. But it's the only way to unlock the true potential of AI in security. Staying trapped in alert fatigue is ultimately more costly. The problems are complex, but the solutions are rooted in a data engineering discipline that has been honed for years.
