Stop paying the '3 AM Tax.' When AWS ALB, Java, and Postgres dialects clash, Trace IDs vanish. Read the engineering blueprint for decoupling ingestion from compute and enforcing OpenTelemetry schemas in flight.

By Bo Lei, Co-Founder & CTO, Fleak

The Observability Interpretation Layer: Decoupling Routing from Intelligence

Distributed tracing depends entirely on a consistent schema. If your Load Balancer, Application, and Database don't agree on how to format a Trace ID, you have three isolated piles of text instead of a “trace”.

This is the default state for most infrastructure. AWS ALBs emit space-delimited text with versioned IDs. Java applications throw multi-line stack traces. PostgreSQL writes to Syslog. When a request hits this stack and fails, the engineer on call has to manually grep and correlate timestamps because the tools can't link the events automatically.

We can solve this by normalizing data in motion. Instead of dumping raw text into storage and hoping for the best, we can transform these messy sources into the OpenTelemetry (OTEL) standard within the pipeline itself.

The Problem: Data Fragmentation

To demonstrate the challenge, we created a synthetic dataset representing a cascading failure in a checkout API. In this scenario, a connection leak in the application triggers a chain reaction: the database refuses connections (logging FATAL errors), application threads block while waiting on it (surfacing as 504 timeouts at the load balancer), and the blocked work eventually exhausts the heap (logging OutOfMemoryError).

1. The Suspect (AWS ALB): The investigation begins with a 504 error on the load balancer. The ALB provides a Trace ID with a Root= prefix, which becomes our primary lead.

2. The Dead End (Java Application): Next we hit the Java application layer. Here the logs switch to a standard UUID format inside brackets, which does not match the ALB ID "Root=1-67891233-a0b1c2d3e4f5678901234567". The Java app stripped the AWS-specific "Root" prefix and logged only the internal ID, so the link breaks immediately.

3. The Silent Killer (PostgreSQL): Further down, in the PostgreSQL database, we lose the trace entirely. The database logs in a completely different format (Syslog) and leaves us only timestamps to work out what went wrong.
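With no trace ID on the PostgreSQL side, the only join key left is time. The following is a minimal sketch of that fallback, assuming the syslog timestamps have already been parsed into `datetime` objects. The helper name `events_near`, the 2-second window, and the sample events are illustrative, not taken from the dataset.

```python
from datetime import datetime, timedelta
from typing import List, Tuple


def events_near(anchor: datetime, events: List[Tuple[datetime, str]], window_s: float = 2.0) -> List[str]:
    """Return messages logged within +/- window_s seconds of the anchor event."""
    delta = timedelta(seconds=window_s)
    return [msg for ts, msg in events if anchor - delta <= ts <= anchor + delta]


# Hypothetical example: the ALB 504 is the anchor; PostgreSQL lines carry only a time.
alb_504_at = datetime(2026, 1, 19, 3, 12, 45)
pg_events = [
    (datetime(2026, 1, 19, 3, 12, 44), "FATAL:  sorry, too many clients already"),
    (datetime(2026, 1, 19, 3, 12, 50), "LOG:  checkpoint starting: time"),
]
print(events_near(alb_504_at, pg_events))  # ['FATAL:  sorry, too many clients already']
```

Any other request that touched the database inside the same window also matches. This is why timestamp correlation turns into guesswork under real traffic.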

We have trace IDs in the first two logs, but the formats don't match (the ALB adds that annoying Root= prefix). The database is even worse—it has no trace ID at all, so we're stuck guessing based on timestamps. In a production incident, reconciling these differences requires manual correlation or complex query logic, significantly increasing Mean Time To Resolution (MTTR).
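The ALB/Java mismatch is mechanical rather than semantic. The ALB value `Root=1-67891233-a0b1c2d3e4f5678901234567` holds a version (`1`), 8 hex digits of epoch seconds, and 24 more hex digits. Concatenating the two hex parts gives the 32-hex-digit (128-bit) trace ID that OpenTelemetry uses.

The sketch below converts both forms to that canonical value. It rests on one loudly stated assumption: the Java app logs the same 128 bits as a dashed UUID. The function name `canonical_trace_id` is hypothetical.

```python
import re
from typing import Optional

# ALB / X-Ray style: Root=<version>-<8 hex epoch seconds>-<24 hex identifier>
_ALB_ROOT = re.compile(r"Root=1-([0-9a-f]{8})-([0-9a-f]{24})", re.IGNORECASE)
_HEX32 = re.compile(r"[0-9a-f]{32}")


def canonical_trace_id(raw: str) -> Optional[str]:
    """Return a lowercase 32-hex-digit trace ID, or None if raw holds no recognizable ID."""
    m = _ALB_ROOT.search(raw)
    if m:
        return (m.group(1) + m.group(2)).lower()
    # Assumed Java form: the same 128 bits as a UUID, optionally wrapped in brackets.
    candidate = raw.strip().strip("[]").replace("-", "").lower()
    return candidate if _HEX32.fullmatch(candidate) else None


print(canonical_trace_id("Root=1-67891233-a0b1c2d3e4f5678901234567"))
print(canonical_trace_id("[67891233-a0b1-c2d3-e4f5-678901234567]"))
```

Both calls yield `67891233a0b1c2d3e4f5678901234567`, so the two layers can be joined on a plain string comparison.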

The Architecture: Decoupling Routing from Processing

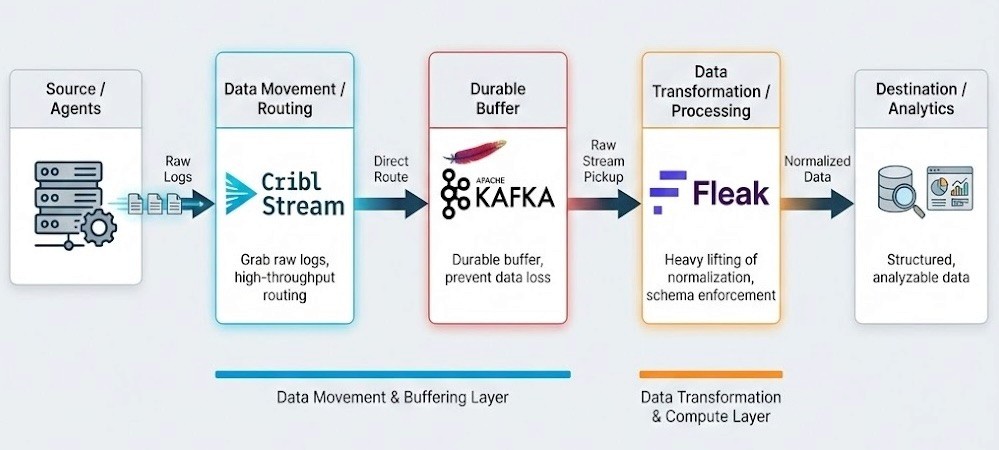

A production-ready observability pipeline separates data movement from data transformation, and this implementation follows that split.

We use Cribl Stream to grab the raw logs off the agents and route them directly into Kafka. This gives us a durable buffer so we don't lose data if the downstream processing layer chokes or needs to be paused. From there, Fleak picks up the raw stream to handle the heavy lifting of normalization.

Architect's Note: Why separate 'Routing' (Cribl) from 'Compute' (Fleak)? You might look at this stack and ask: "Cribl can parse JSON. Why are we chaining two engines?"

It comes down to avoiding Head-of-Line Blocking.

We treat Cribl Stream as the "Logistics Layer." Its primary job is to get data off the edge and into the buffer (Kafka) as fast as possible. If we load the edge nodes with CPU-intensive tasks—like recursive OTEL schema mapping or AI-based inference—we risk creating back pressure that slows down raw ingestion.

We treat Fleak as the "Compute Layer." Normalizing a messy stack trace into OTEL is genuinely compute-bound work. By isolating this logic after the Kafka buffer, we ensure that complex transformations never block the raw data flow.
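The decoupling can be illustrated in a single process. This sketch uses `collections.deque` as a stand-in for the Kafka topic. The names `route` and `drain` are hypothetical labels for the routing and compute sides and are not Cribl or Fleak APIs. Kafka additionally provides durability and lets the consumer run on separate machines, and this sketch models neither.

```python
from collections import deque
from typing import Callable, Deque, Iterable, List


def route(lines: Iterable[str], buffer: Deque[str]) -> int:
    """Routing side: append raw lines without parsing them (constant work per line)."""
    count = 0
    for line in lines:
        buffer.append(line)
        count += 1
    return count


def drain(buffer: Deque[str], normalize: Callable[[str], dict], max_batch: int = 500) -> List[dict]:
    """Compute side: pull up to max_batch raw lines and run the expensive normalization."""
    out: List[dict] = []
    while buffer and len(out) < max_batch:
        out.append(normalize(buffer.popleft()))
    return out
```

If `drain` is slow or paused, `route` keeps accepting data and only the buffer grows. In production, that growth shows up as Kafka consumer lag rather than as backpressure on the edge nodes.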

Implementation: Normalizing to OpenTelemetry

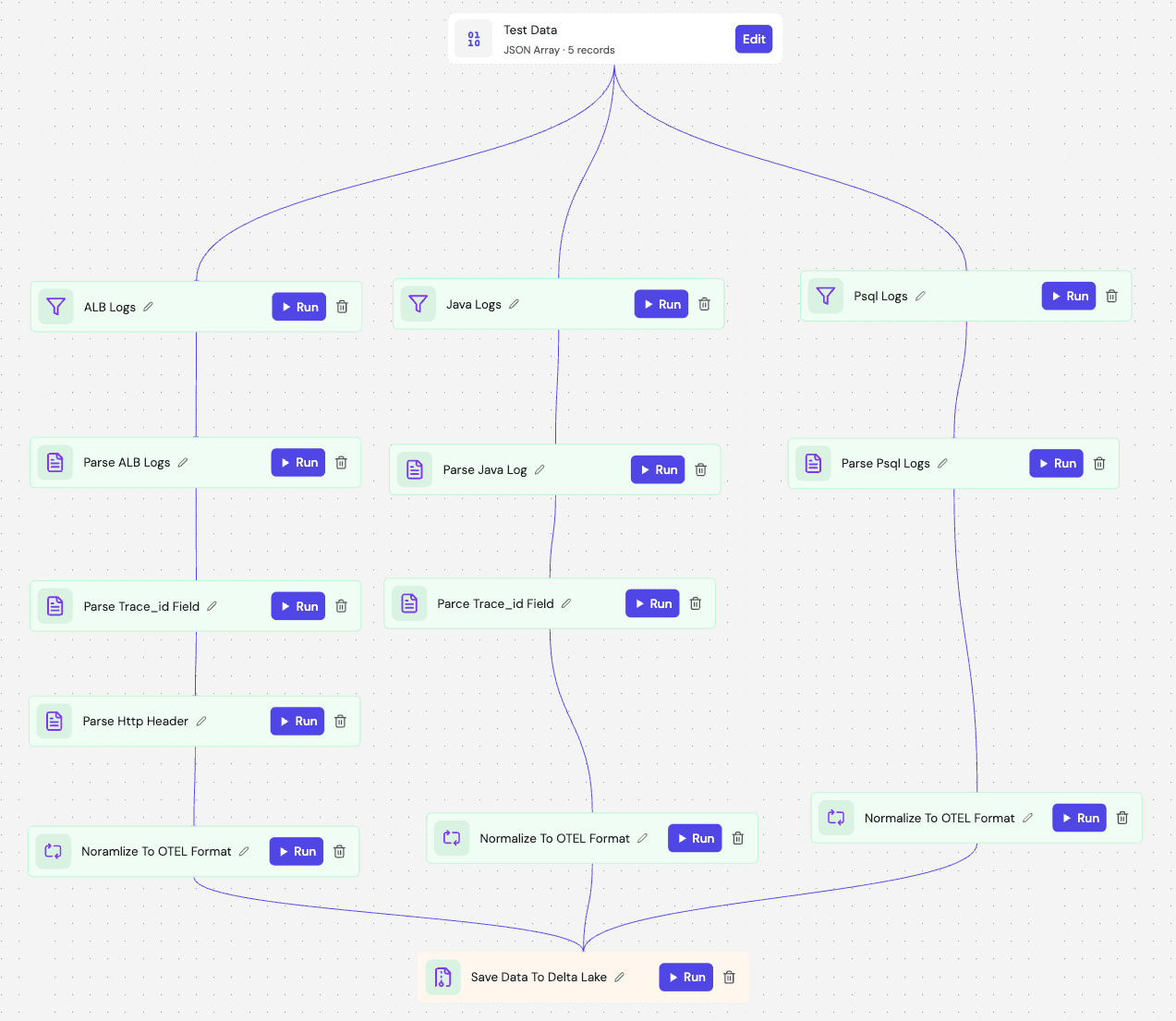

With the architecture set, the implementation becomes purely about logic. We use Fleak's visual DAG builder to automate the three critical steps required to unify the data: branching, parsing, and semantic mapping.

1. Branching and Parsing with Zero Code

The first challenge is separating the mixed stream. In the DAG, we use Filter Nodes with simple regular expressions to identify the source type, sending ALB entries (which begin with a request type such as h2) to the ALB branch and stack traces to the Java branch.
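Fleak's Filter Nodes are configured visually, so the following is only a plain-Python sketch of the same branching rule. The ALB prefixes are the request types listed in the AWS access-log documentation (http, https, h2, grpcs, ws, wss). The Java patterns assume a hypothetical `yyyy-MM-dd HH:mm:ss` log layout. The branch names are illustrative.

```python
import re

# ALB access-log entries begin with the request type, then an ISO 8601 timestamp.
ALB_LINE = re.compile(r"^(?:http|https|h2|grpcs|ws|wss) \d{4}-\d{2}-\d{2}T")

# Hypothetical Java layout: timestamped log line, exception header, or stack frame.
JAVA_LINE = re.compile(
    r"^(?:\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}"  # timestamped application log line
    r"|\s+at "                                  # stack frame
    r"|Caused by: "                             # chained cause
    r"|[\w.$]+(?:Exception|Error)\b)"           # exception header, e.g. java.lang.OutOfMemoryError
)


def branch(line: str) -> str:
    """Return the name of the branch a raw line should follow."""
    if ALB_LINE.match(line):
        return "alb"
    if JAVA_LINE.match(line):
        return "java"
    return "other"
```

The ALB check runs first because its prefix is the most specific. Anything unrecognized (here, the PostgreSQL syslog lines) falls through to a catch-all branch instead of being dropped.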

Once branched, we don't need to write custom parsers. We simply drop in a Parser Node.

For the ALB, we select a `delimited_text` configuration. For the Java stack trace, we use a standard Grok pattern (`%{LOGLEVEL:log_level}...`).
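To show what those parser nodes do conceptually, here is a rough Python equivalent. It is a sketch, not Fleak's implementation. The ALB field names follow the field order in the AWS access-log documentation, covering only the first 18 fields up to `trace_id`. The Java log layout, the `merge_multiline` helper, and the sample lines used with it are hypothetical.

```python
import re
from typing import Dict, List, Optional

# First 18 ALB access-log fields, in AWS's documented order; later fields are ignored.
ALB_FIELDS = [
    "type", "time", "elb", "client_port", "target_port",
    "request_processing_time", "target_processing_time", "response_processing_time",
    "elb_status_code", "target_status_code", "received_bytes", "sent_bytes",
    "request", "user_agent", "ssl_cipher", "ssl_protocol", "target_group_arn", "trace_id",
]
# A token is either a double-quoted string (quotes stripped) or a run of non-spaces.
_TOKEN = re.compile(r'"([^"]*)"|(\S+)')


def parse_alb(line: str) -> Dict[str, str]:
    values = [bare or quoted for quoted, bare in _TOKEN.findall(line)]
    return dict(zip(ALB_FIELDS, values))


# Plain-regex equivalent of a Grok pattern along the lines of
# %{TIMESTAMP_ISO8601:ts} %{LOGLEVEL:log_level} \[%{DATA:trace_id}\] %{JAVACLASS:logger} - %{GREEDYDATA:message}
# for a hypothetical Java log layout.
_JAVA_HEAD = re.compile(
    r"(?P<ts>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}\.\d{3}) "
    r"(?P<log_level>TRACE|DEBUG|INFO|WARN|ERROR|FATAL) "
    r"\[(?P<trace_id>[^\]]+)\] (?P<logger>\S+) - (?P<message>.*)"
)
_RECORD_START = re.compile(r"\d{4}-\d{2}-\d{2} ")


def merge_multiline(lines: List[str]) -> List[str]:
    """Glue stack-trace lines onto the timestamped line that precedes them."""
    records: List[str] = []
    for line in lines:
        if _RECORD_START.match(line) or not records:
            records.append(line)
        else:
            records[-1] += "\n" + line
    return records


def parse_java(record: str) -> Optional[Dict[str, str]]:
    """Parse the head line with the regex; keep the rest as the raw stack trace."""
    head, _, stack = record.partition("\n")
    m = _JAVA_HEAD.fullmatch(head)
    if m is None:
        return None
    return {**m.groupdict(), "stack_trace": stack}
```

The multi-line merge has to run before parsing. Otherwise every `at ...` frame would reach the parser as a separate, unparseable record.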

2. The Transformation Logic (Low-Code Eval)

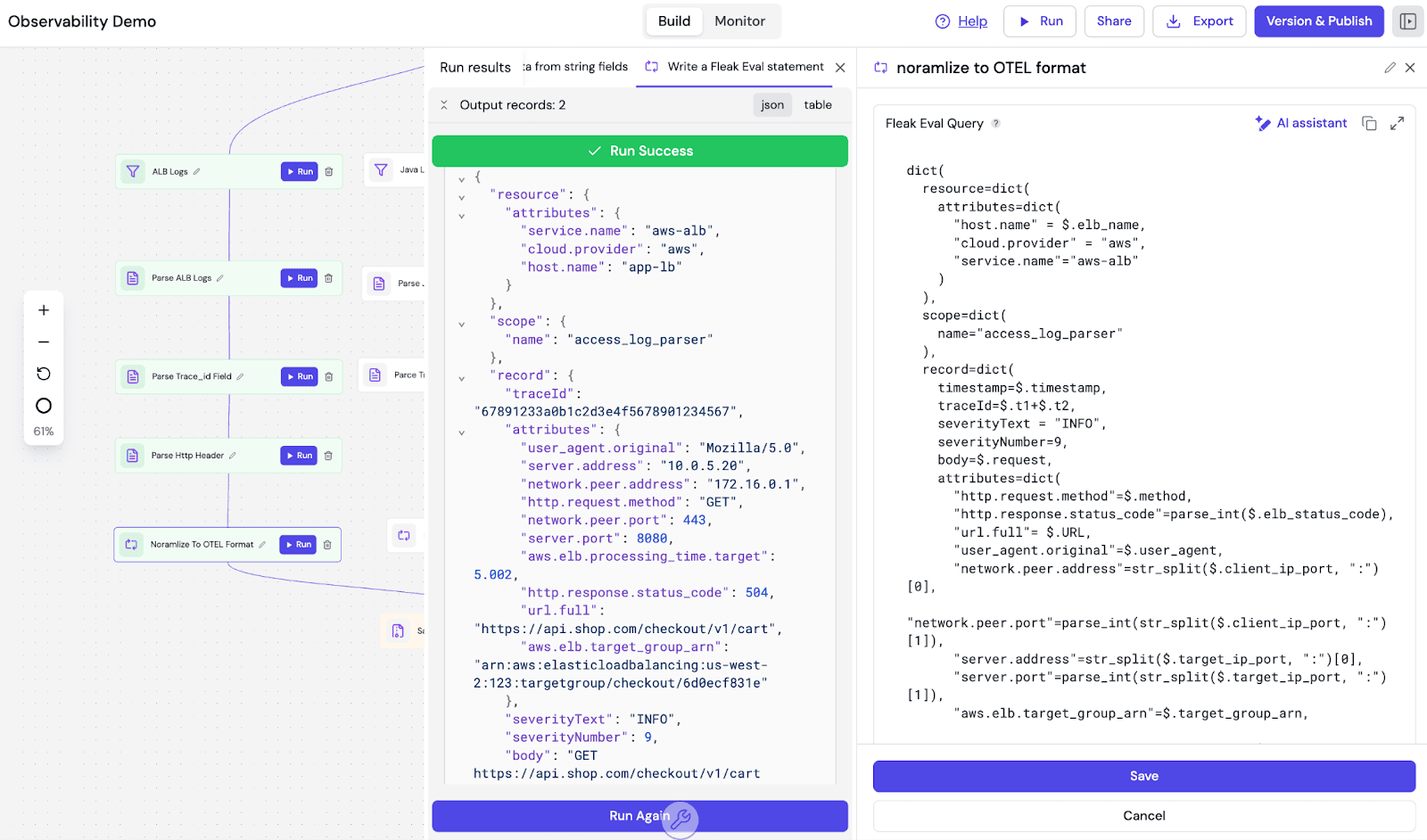

This is where the actual normalization happens. We use Eval Nodes to transform the proprietary fields into the OTEL schema. Fleak uses a Python-like dictionary structure for this, which allows us to define the output schema declaratively.

In the configuration used to map the ALB logs, Trace ID normalization is a single expression: the two parsed components (t1=67891233, t2=a0b1c2d3e4f5678901234567) are concatenated with $.t1+$.t2.

This declarative approach is significantly faster than writing and testing custom transformation scripts. We define the target schema (OTEL), map the incoming fields, and Fleak handles the execution optimization.
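Fleak's Eval node syntax is not reproduced here. To illustrate the same declarative idea, this sketch expresses the ALB-to-OTEL mapping as a plain Python dictionary of target field to extraction function. Several assumptions are involved:

- The input field names (`time`, `client_port`, `elb_status_code`, `request`, `user_agent`, `t1`, `t2`) are assumed names for the parsed ALB columns.
- The attribute keys follow OpenTelemetry HTTP semantic-convention names.
- The status-code-to-severity thresholds are our own choice. The severity numbers use the OTEL log data model ranges (INFO = 9, WARN = 13, ERROR = 17).

```python
import re
from typing import Any, Callable, Dict, Optional, Tuple

Record = Dict[str, Any]


def split_alb_trace(raw: str) -> Dict[str, str]:
    """Split 'Root=1-<t1>-<t2>' into the t1/t2 fields the mapping expects."""
    m = re.search(r"Root=1-([0-9a-f]{8})-([0-9a-f]{24})", raw)
    return {"t1": m.group(1), "t2": m.group(2)} if m else {}


def _status(r: Record) -> Optional[int]:
    s = r.get("elb_status_code", "")
    return int(s) if s.isdigit() else None


def _severity(r: Record) -> Tuple[str, int]:
    code = _status(r) or 0
    if code >= 500:
        return ("ERROR", 17)
    if code >= 400:
        return ("WARN", 13)
    return ("INFO", 9)


# Declarative mapping: OTEL log field -> how to compute it from the parsed record.
ALB_TO_OTEL: Dict[str, Callable[[Record], Any]] = {
    "timestamp": lambda r: r["time"],
    "trace_id": lambda r: r["t1"] + r["t2"],  # same idea as $.t1+$.t2
    "severity_text": lambda r: _severity(r)[0],
    "severity_number": lambda r: _severity(r)[1],
    "body": lambda r: r["request"],
    "attributes": lambda r: {
        "http.response.status_code": _status(r),
        "client.address": r["client_port"].rsplit(":", 1)[0],
        "user_agent.original": r["user_agent"],
    },
}


def apply_mapping(record: Record, mapping: Dict[str, Callable[[Record], Any]]) -> Record:
    return {field: fn(record) for field, fn in mapping.items()}
```

The mapping is data rather than control flow. Adding or renaming an output field is a one-line change, and the same `apply_mapping` runner works for every source.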

3. Enforcing the Contract

Finally, the pipeline terminates in a Delta Lake sink. Unlike a plain S3 dump, the sink enforces an Avro schema, which guarantees that every record landing in the data lake complies with the NormalizedLogRecord contract. Any log that fails validation is dead-lettered immediately, so malformed records never turn the lake into a data swamp.
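The article does not list the NormalizedLogRecord fields, so the schema below is hypothetical. The validator implements only a small subset of Avro typing (primitives, unions, maps) to show the validate-or-dead-letter decision at the sink. A real sink would rely on a full Avro library instead.

```python
from typing import Any, Dict, List, Tuple

# Hypothetical field list for the NormalizedLogRecord contract, written as an Avro record schema.
NORMALIZED_LOG_RECORD: Dict[str, Any] = {
    "type": "record",
    "name": "NormalizedLogRecord",
    "fields": [
        {"name": "timestamp", "type": "string"},
        {"name": "trace_id", "type": ["null", "string"]},
        {"name": "severity_number", "type": "int"},
        {"name": "body", "type": "string"},
        {"name": "attributes", "type": {"type": "map", "values": ["string", "long"]}},
    ],
}

# Tiny subset of Avro typing: primitives, unions (lists) and maps only.
_PRIMITIVES = {"null": type(None), "string": str, "int": int, "long": int, "boolean": bool}


def conforms(value: Any, avro_type: Any) -> bool:
    if isinstance(avro_type, list):  # union: any branch may match
        return any(conforms(value, t) for t in avro_type)
    if isinstance(avro_type, dict) and avro_type.get("type") == "map":
        return isinstance(value, dict) and all(
            isinstance(k, str) and conforms(v, avro_type["values"]) for k, v in value.items()
        )
    py_type = _PRIMITIVES.get(avro_type) if isinstance(avro_type, str) else None
    if py_type is None:
        return False  # type not covered by this sketch
    if py_type is int and isinstance(value, bool):
        return False  # bool is an int subclass in Python, but not an Avro int
    return isinstance(value, py_type)


def validate_or_dead_letter(records: List[Dict[str, Any]], schema: Dict[str, Any]) -> Tuple[list, list]:
    """Split records into (valid, dead_letter) according to the schema's fields."""
    valid, dead_letter = [], []
    for rec in records:
        ok = all(f["name"] in rec and conforms(rec[f["name"]], f["type"]) for f in schema["fields"])
        (valid if ok else dead_letter).append(rec)
    return valid, dead_letter
```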

Engineering Trade-offs

Moving normalization into the pipeline isn't free. It adds compute overhead and creates a stricter dependency on your ingestion infrastructure. If the pipeline backs up, your logs are delayed.

However, the alternative is paying that tax during an incident. When we keep logs in their raw, vendor-specific formats, we force every engineer to become a parsing expert at 3 AM.

In our simulation, normalizing the Trace IDs didn't magically fix the memory leak. But it did allow us to skip the twenty minutes of "grep-and-hope" that usually precedes the actual diagnosis. It turned a data formatting problem back into a regular engineering problem.

Published Jan 19, 2026