Fleak enables efficient Retrieval-Augmented Generation (RAG) by combining retrieval from a Pinecone vector database with LLM-based answer generation, making it suitable for information-rich applications such as customer support and technical documentation.


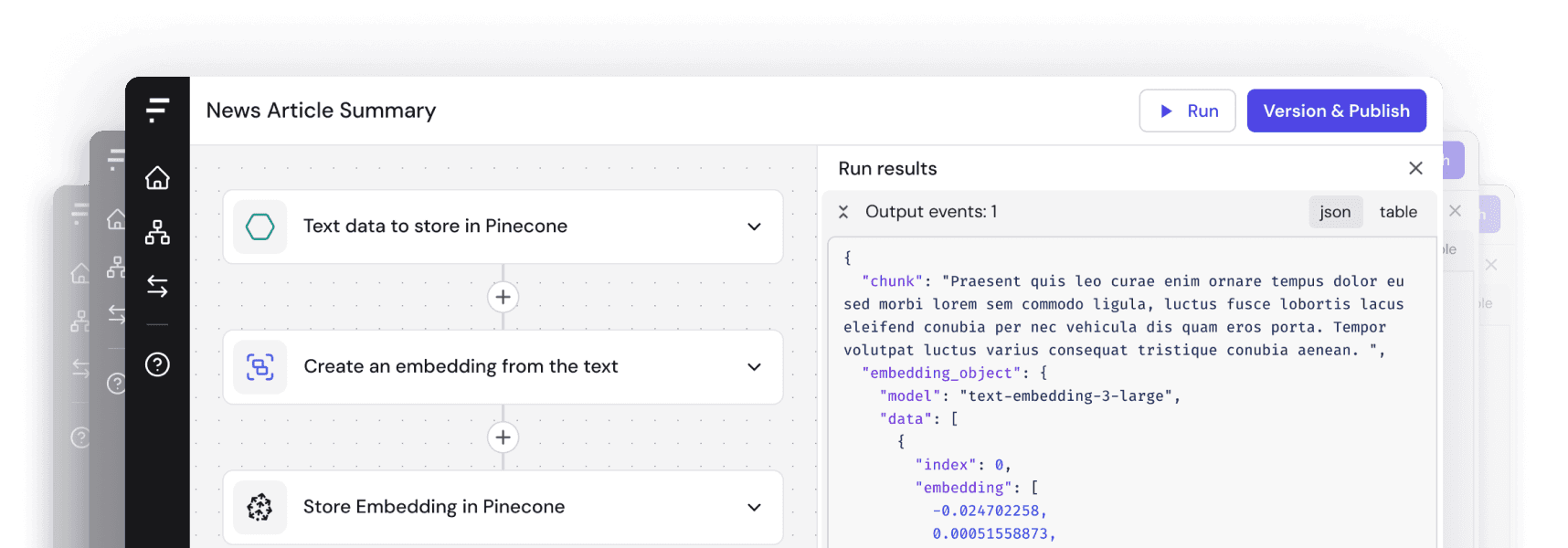

Retrieval-Augmented Generation (RAG) answers complex questions by combining information retrieval with a generative model: relevant passages are first fetched from a knowledge store, then passed to the model as context for its answer. With Fleak, you can quickly set up a workflow that retrieves relevant information on demand from a Pinecone vector database and generates precise answers with a Large Language Model (LLM). Whether you’re handling customer queries, technical documentation, or any other data-rich environment, Fleak’s integration with Pinecone is designed to keep retrieval scalable and accurate, providing reliable insights across domains.
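The retrieve-then-generate flow can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Fleak’s or Pinecone’s API: a toy bag-of-words `embed` function and an in-memory list stand in for a real embedding model and the Pinecone index, and `llm` is a placeholder for whatever model call your workflow uses. All names here (`Doc`, `embed`, `retrieve`, `build_prompt`, `answer`, `VOCAB`, `INDEX`) are hypothetical.

```python
import math
import re
from collections import Counter
from collections.abc import Callable
from dataclasses import dataclass

# Hypothetical toy vocabulary; a real workflow would use an embedding model.
VOCAB = ["refund", "shipping", "password", "reset", "order", "days"]


@dataclass
class Doc:
    doc_id: str
    text: str
    vector: list[float]


def embed(text: str) -> list[float]:
    """Toy embedding: count occurrences of each vocabulary word."""
    counts = Counter(re.findall(r"[a-z]+", text.lower()))
    return [float(counts[w]) for w in VOCAB]


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return 0.0 if na == 0 or nb == 0 else dot / (na * nb)


# In-memory stand-in for a Pinecone index (assumption for illustration only).
DOCS = {
    "kb-1": "To reset your password, open Settings and choose reset password.",
    "kb-2": "Standard shipping for an order takes 3 to 5 days.",
    "kb-3": "A refund is issued within 10 days of the return.",
}
INDEX = [Doc(k, t, embed(t)) for k, t in DOCS.items()]


def retrieve(query_vec: list[float], index: list[Doc], top_k: int = 2) -> list[Doc]:
    """Return the top_k documents most similar to the query vector."""
    ranked = sorted(index, key=lambda d: cosine(query_vec, d.vector), reverse=True)
    return ranked[:top_k]


def build_prompt(question: str, docs: list[Doc]) -> str:
    """Ground the LLM prompt in the retrieved passages."""
    context = "\n".join(f"[{d.doc_id}] {d.text}" for d in docs)
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
    )


def answer(question: str, index: list[Doc], llm: Callable[[str], str], top_k: int = 2) -> str:
    """Embed the question, retrieve context, and pass the prompt to the LLM."""
    docs = retrieve(embed(question), index, top_k)
    return llm(build_prompt(question, docs))
```

For example, `retrieve(embed("How do I reset my password?"), INDEX, top_k=1)` returns the `kb-1` passage, which `build_prompt` then places in the model’s context. In a real deployment, `retrieve` would presumably be replaced by a similarity query against the Pinecone index and `llm` by an actual model call; the overall shape (embed the question, fetch the nearest chunks, ground the prompt in them) stays the same.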

How to use the template