By Nicolas Hillison, Data Scientist, Fleak

Social media platforms hold a lot of user data. This data shows what people like and how they behave, which makes it valuable for businesses, researchers, and developers: it helps them understand their audiences better and make informed decisions. But sorting through all this information can be hard. That's why tools for data analysis and summarization matter: they help surface the useful parts quickly.

In this blog post, we'll show you how to make an easy and effective app using Fleak to connect to the Reddit API. We'll explain how this tool can summarize a user's profile, making it simple to get clear and useful insights.

Data Enrichment Process: Making Your Data Smarter and More Useful

Imagine you've got a plain cake, and you want to turn it into a delicious, layered masterpiece. Data enrichment is like that cake transformation. You start with something basic, then add lots of yummy layers to make it amazing. This process makes your data smarter and way more useful. Now, let's break it down into four easy steps: getting your raw data, adding extra details, digging for valuable insights, and then showing off the improved data.

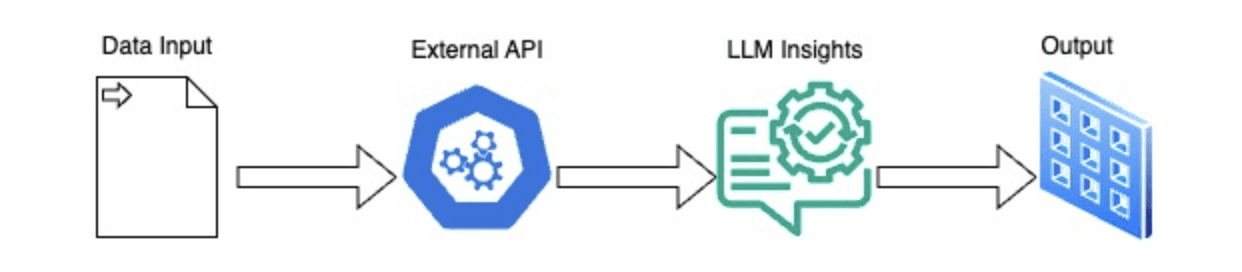

Step 1: Input data event

First up, we need the raw data. Think of this as the starting point—basic information that needs some extra flavor. This raw data can come from all sorts of places like customer forms, transaction records, or sensors. In the image, the first icon (a document with an arrow) represents this initial data coming in. It's the foundation that we'll build on.

Step 2: Calling an external API to enrich the data

Next, we make our raw data more interesting by calling an external API. An API (Application Programming Interface) is like a bridge that connects your data to other sources of information. For example, you might pull in demographic info, location details, or financial data. The second icon (a hexagon with connecting gears) shows this step, where we add valuable extra details to our basic data, turning it into something much richer.

Step 3: Extracting Insights from an LLM

Now that our data is enriched, it's time to dig deeper and find the important stuff using a Large Language Model (LLM). LLMs are smart tools that can understand and analyze natural language, helping us find trends, sentiments, and predictions in our data. The third icon (a chat bubble with a gear) represents this step of analyzing the enriched data to pull out useful insights.

Step 4: Enriched Data Output

Finally, we produce the finished product: the newly enriched and analyzed data. This can be in the form of reports, database entries, or even direct feeds into other systems. The last icon (a grid or table) signifies this final step of outputting the improved data, ready for you to use in making decisions or crafting strategies.
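To make the four steps concrete, here is a minimal TypeScript sketch of the same flow. It is an illustration under assumptions, not Fleak's implementation: the `InputEvent`, `EnrichedEvent`, and `OutputEvent` shapes and the `runPipeline` function are hypothetical names, and the external API and the LLM are replaced by stand-in functions. A real pipeline would make these calls asynchronously.

```typescript
// Step 1: the raw input event (hypothetical shape).
interface InputEvent {
  username: string;
}

// Step 2 result: the event plus details fetched from an external source.
interface EnrichedEvent extends InputEvent {
  posts: string[];
}

// Step 3 and 4 result: the enriched event plus the insight derived from it.
interface OutputEvent extends EnrichedEvent {
  summary: string;
}

// The external API and the LLM are passed in as plain functions, so the
// pipeline itself does not depend on any particular provider.
type Enricher = (event: InputEvent) => string[];
type Summarizer = (posts: string[]) => string;

function runPipeline(
  event: InputEvent,
  enrich: Enricher,
  summarize: Summarizer
): OutputEvent {
  const enriched: EnrichedEvent = { ...event, posts: enrich(event) }; // Step 2
  const summary = summarize(enriched.posts); // Step 3
  return { ...enriched, summary }; // Step 4
}

// Stand-ins for a real API call and a real LLM call.
const fakeEnrich: Enricher = (e) => [`${e.username} posted in r/typescript`];
const fakeSummarize: Summarizer = (posts) => `Based on ${posts.length} post(s).`;

const result = runPipeline({ username: "example_user" }, fakeEnrich, fakeSummarize);
console.log(result.summary); // prints "Based on 1 post(s)."
```

Passing the enrichment and summarization steps in as functions keeps each stage replaceable, which mirrors how the workflow nodes described later can be swapped independently.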

Harnessing Reddit Data with Fleak: A Practical Example

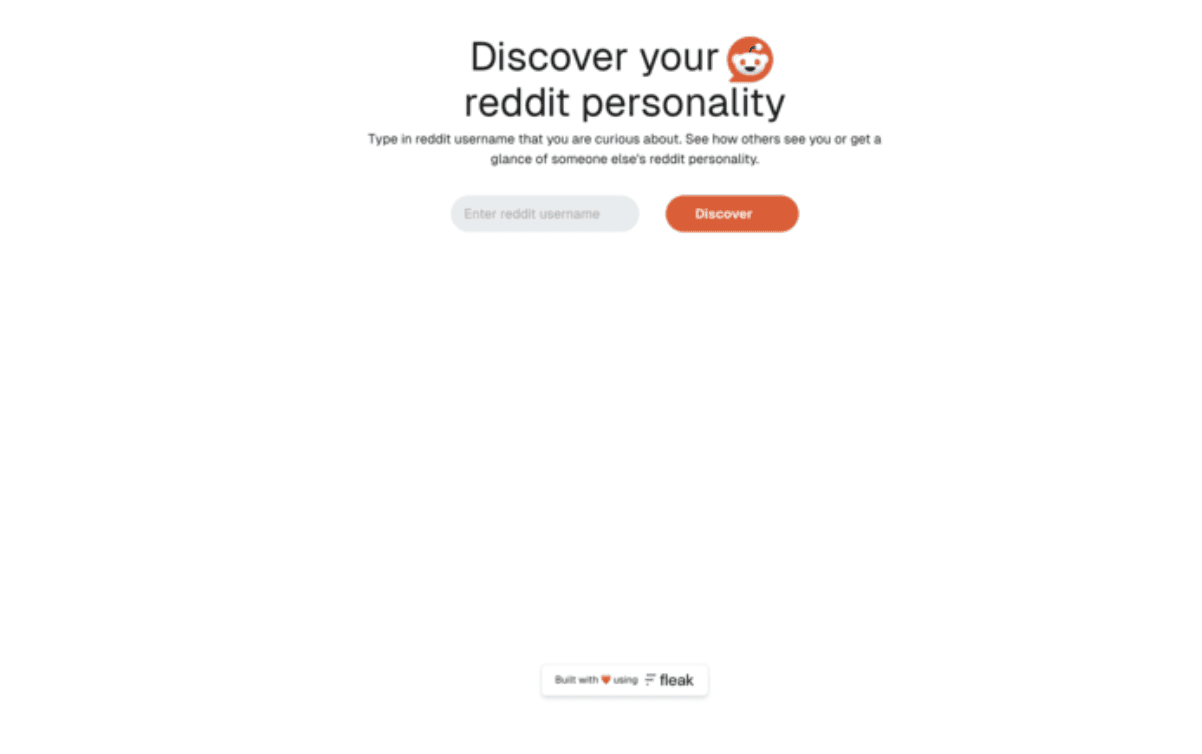

To demonstrate how easy it is to use the Reddit API with Fleak, we've developed a simple yet fun web app that describes Reddit users' personalities based on their activity. Feel free to play around with the app at reddit.fleak.ai.

The web app features a single input field for a Reddit username and a button. Once the button is clicked, the app calls a Fleak workflow where all the magic happens. The workflow uses AWS Lambda to authenticate with Reddit and fetch the user's data. The nodes that follow transform the data into a format that can be fed into the LLM prompt. In the prompt, we ask the LLM to generate a short description of the user's character and output it as Markdown.

Let's walk through the essential steps of how it was built, so you can recreate it or build something similar.

Web Application as User Interface

In our example, we use a simple Next.js web app, built from the create-next-app template, to interact with the workflow. You can check out the entire source code here. The app is straightforward, with just one input field and a button.

Once the user clicks the “Discover” button, the username is sent to the Fleak workflow:

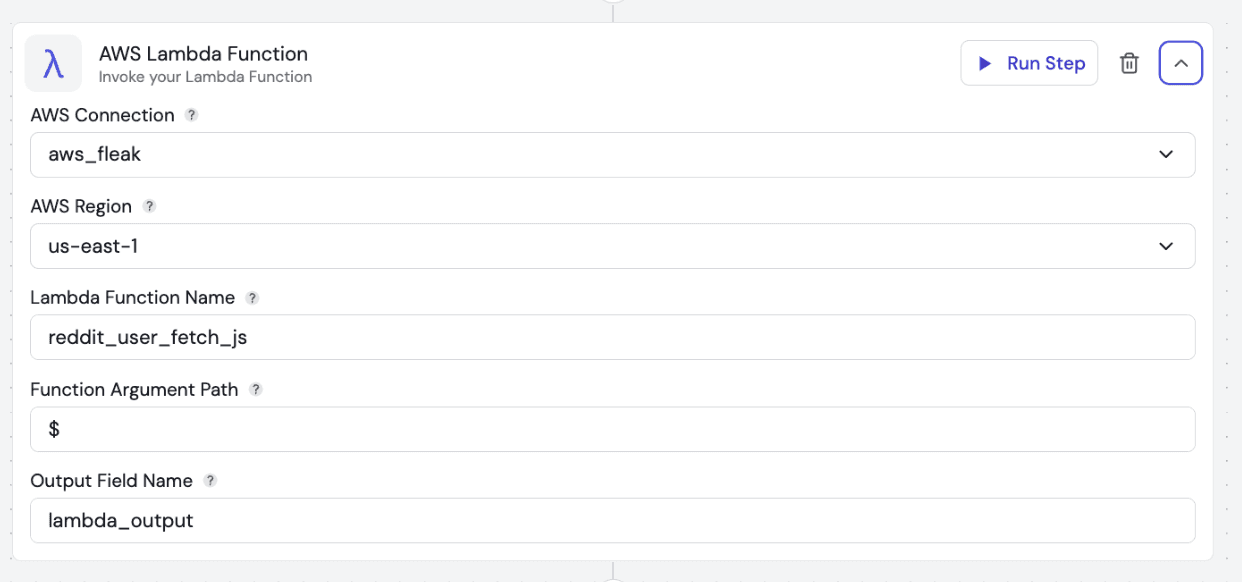
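As a rough idea of what that call can look like, the sketch below cleans up the typed username and builds the HTTP request. Everything specific here is an assumption for illustration: the `endpoint` and `apiKey` arguments stand for the values of your own published workflow, and the `api-key` header name and the `{ username }` payload shape are hypothetical, so check your workflow's API details for the real ones. The username rule (3 to 20 letters, digits, underscores, or hyphens) reflects Reddit's usual constraints.

```typescript
// Shape of the request handed to an HTTP client such as fetch.
interface WorkflowRequest {
  url: string;
  method: "POST";
  headers: Record<string, string>;
  body: string;
}

// Reddit usernames: 3 to 20 characters, letters, digits, "_" or "-".
const USERNAME_PATTERN = /^[A-Za-z0-9_-]{3,20}$/;

// Trim whitespace and drop an optional "u/" or "/u/" prefix.
function normalizeUsername(raw: string): string {
  const name = raw.trim().replace(/^\/?u\//i, "");
  if (!USERNAME_PATTERN.test(name)) {
    throw new Error(`Not a valid Reddit username: "${raw}"`);
  }
  return name;
}

// The header name and payload shape below are assumptions, not Fleak's
// documented contract.
function buildWorkflowRequest(
  rawUsername: string,
  endpoint: string,
  apiKey: string
): WorkflowRequest {
  return {
    url: endpoint,
    method: "POST",
    headers: { "Content-Type": "application/json", "api-key": apiKey },
    body: JSON.stringify({ username: normalizeUsername(rawUsername) }),
  };
}
```

The result can be sent with `fetch(req.url, { method: req.method, headers: req.headers, body: req.body })`. In a Next.js app it is usually safer to make this call from a server-side route, so the API key never reaches the browser.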

Step 1: AWS Lambda function

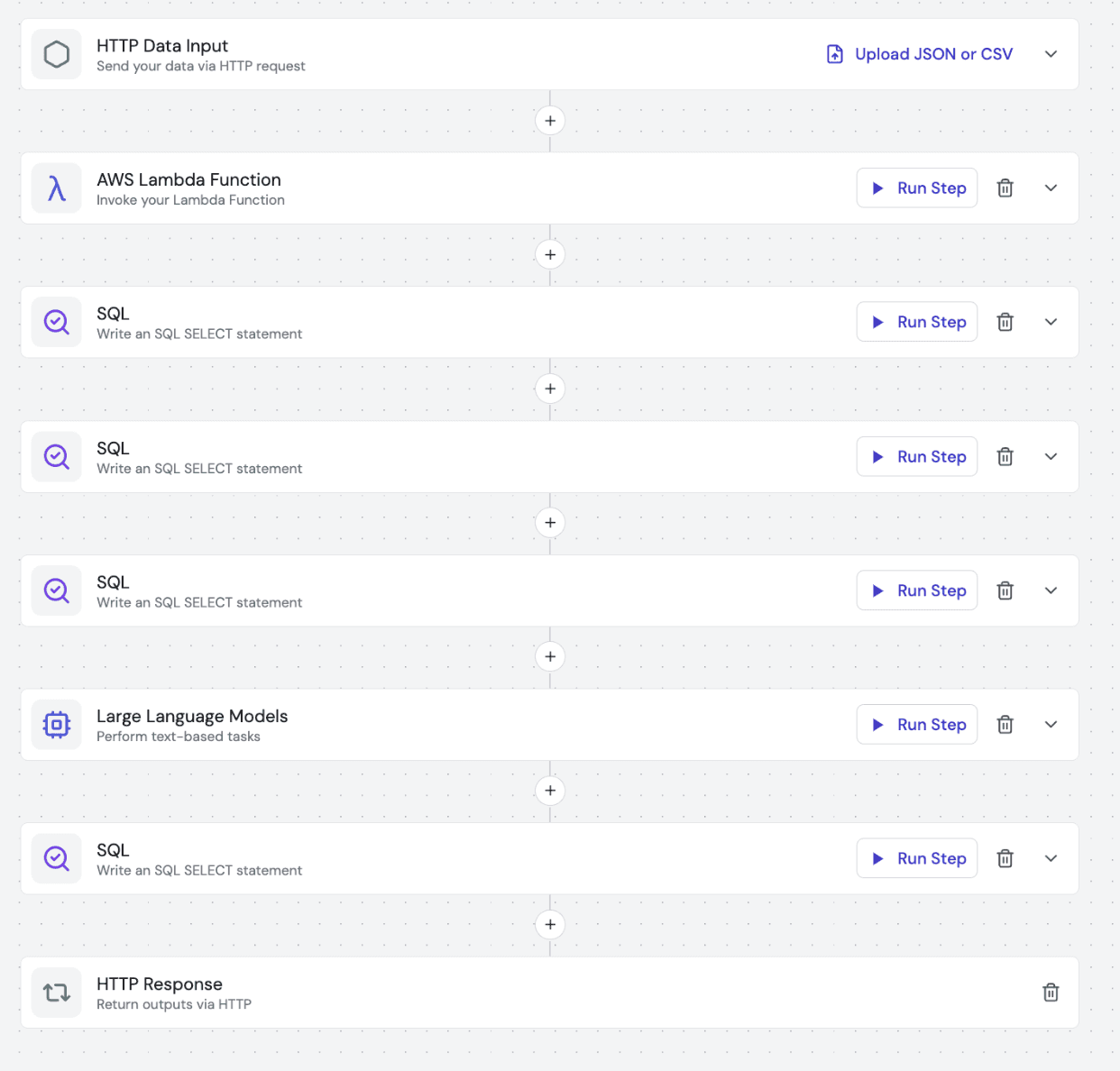

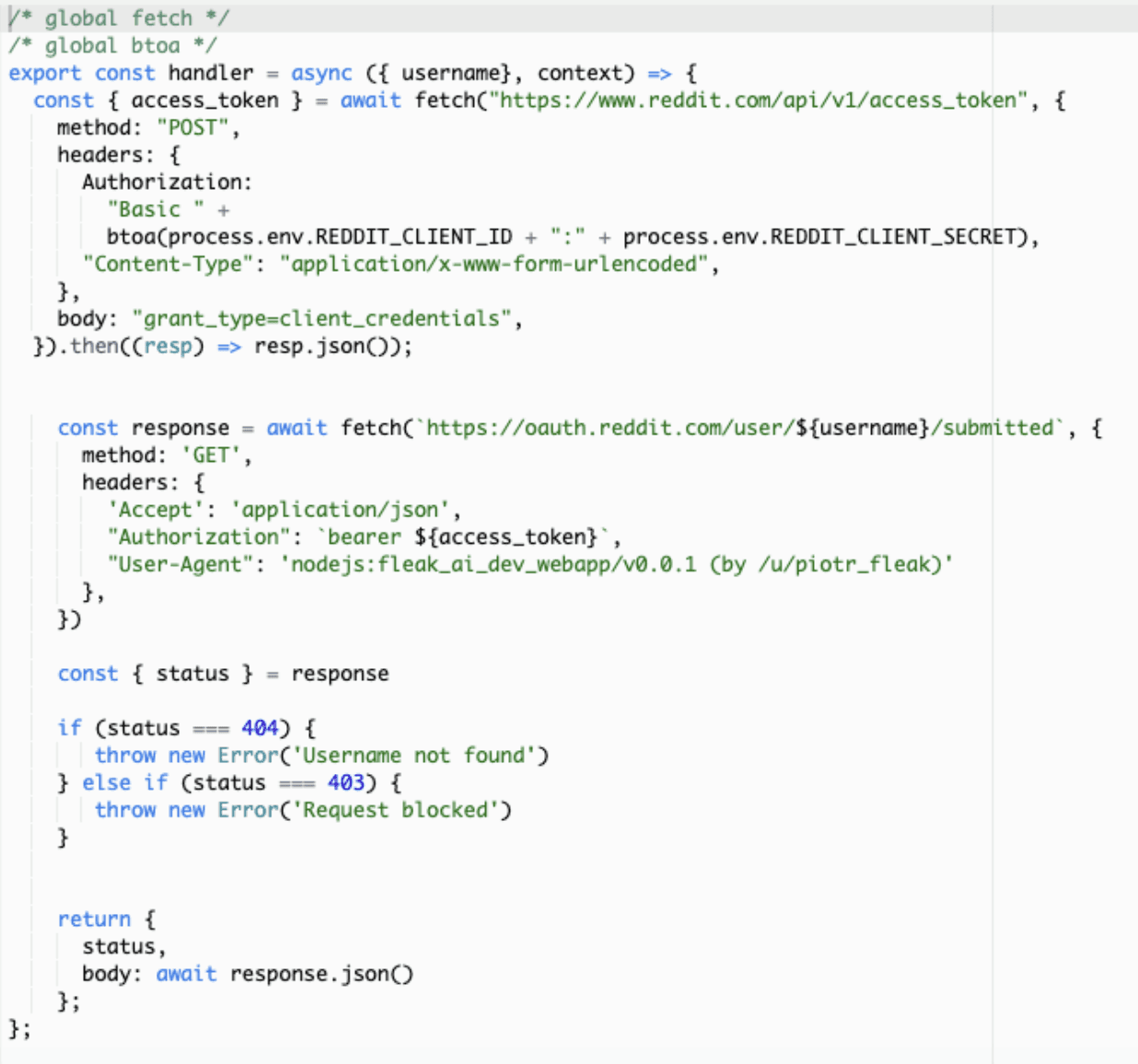

The first step in the workflow is to call the AWS Lambda function with the username provided by the web app. This function authenticates with Reddit and retrieves the user’s past posts and comments through the Reddit API. Doing this in real time ensures that we always get up-to-date information.

Before connecting it to the workflow, we first need to head over to AWS and create a Node.js Lambda function, then add the necessary code, which can be found here.
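For readers who want a feel for what such a function has to do, here is a hedged sketch of the two kinds of Reddit requests involved. It is not the code linked above. It assumes Reddit's app-only OAuth flow (the `client_credentials` grant), which may differ from what the original function uses, and `USER_AGENT`, `buildTokenRequest`, and `buildListingRequest` are hypothetical names. The functions only build request descriptions, so they can be inspected without touching the network.

```typescript
interface HttpRequest {
  url: string;
  method: "GET" | "POST";
  headers: Record<string, string>;
  body?: string;
}

// Reddit asks API clients to send a descriptive User-Agent; placeholder value.
const USER_AGENT = "profile-summary-sketch/0.1";

// Ask for an app-only access token (assumed grant type: client_credentials).
function buildTokenRequest(clientId: string, clientSecret: string): HttpRequest {
  const credentials = btoa(clientId + ":" + clientSecret); // HTTP Basic auth
  return {
    url: "https://www.reddit.com/api/v1/access_token",
    method: "POST",
    headers: {
      Authorization: `Basic ${credentials}`,
      "Content-Type": "application/x-www-form-urlencoded",
      "User-Agent": USER_AGENT,
    },
    body: "grant_type=client_credentials",
  };
}

type ListingKind = "submitted" | "comments";

// Fetch a user's posts ("submitted") or comments with the token from above.
function buildListingRequest(
  username: string,
  kind: ListingKind,
  token: string,
  limit = 25
): HttpRequest {
  const user = encodeURIComponent(username);
  return {
    url: `https://oauth.reddit.com/user/${user}/${kind}?limit=${limit}`,
    method: "GET",
    headers: { Authorization: `Bearer ${token}`, "User-Agent": USER_AGENT },
  };
}
```

Inside a Lambda handler, the client ID and secret would typically come from environment variables. The handler would send the token request first, read `access_token` from the JSON response, and then send one listing request for posts and one for comments.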

Once the Lambda function is set up in AWS, the next step is to build the workflow in Fleak. We start by adding an AWS Lambda Function node that will call the function we just created.

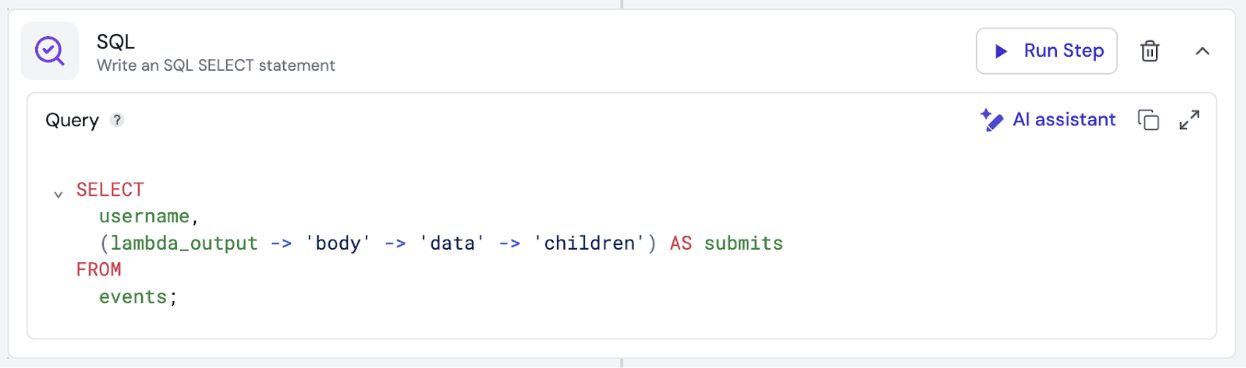

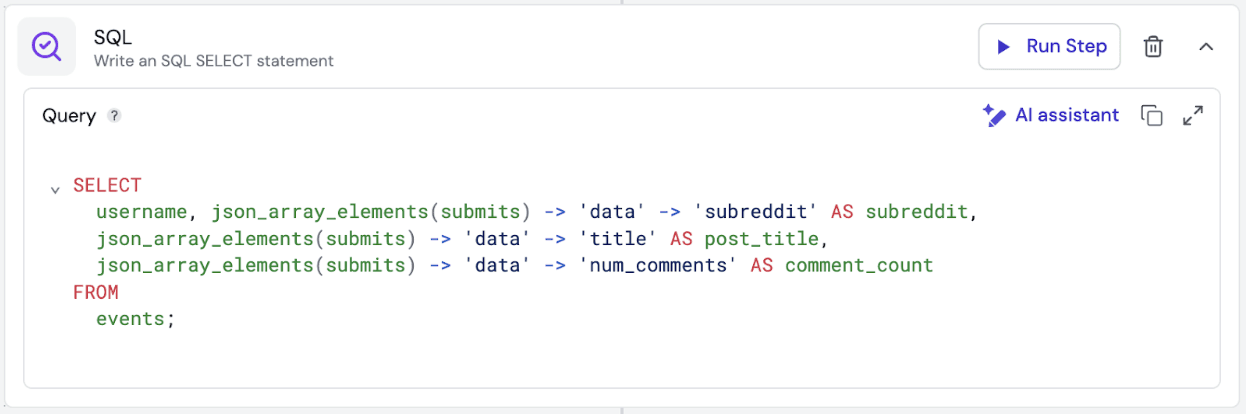

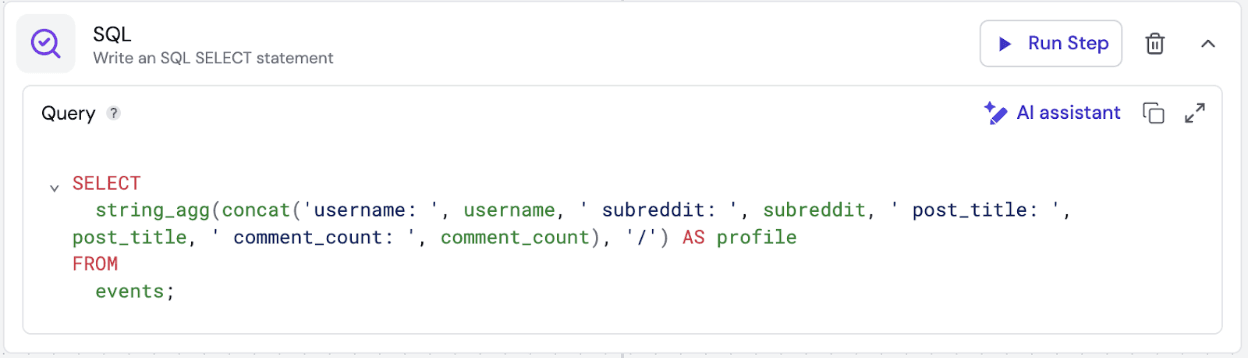

Step 2: SQL transformations

The data we receive from Reddit has its own structure, which doesn’t directly fit into the LLM prompt. Luckily, we can easily apply a few SQL transformations to format it correctly. With Fleak, we can develop these transformations interactively, running each step individually and viewing the results in the side panel as we go.

First, we grab the list of posts for each user.

Next, we extract the key details from each post, such as the subreddit name, post title, and the number of comments. This gives us a clearer view of the most important information for each post.

Finally, we combine all that information into a single text value for each user:

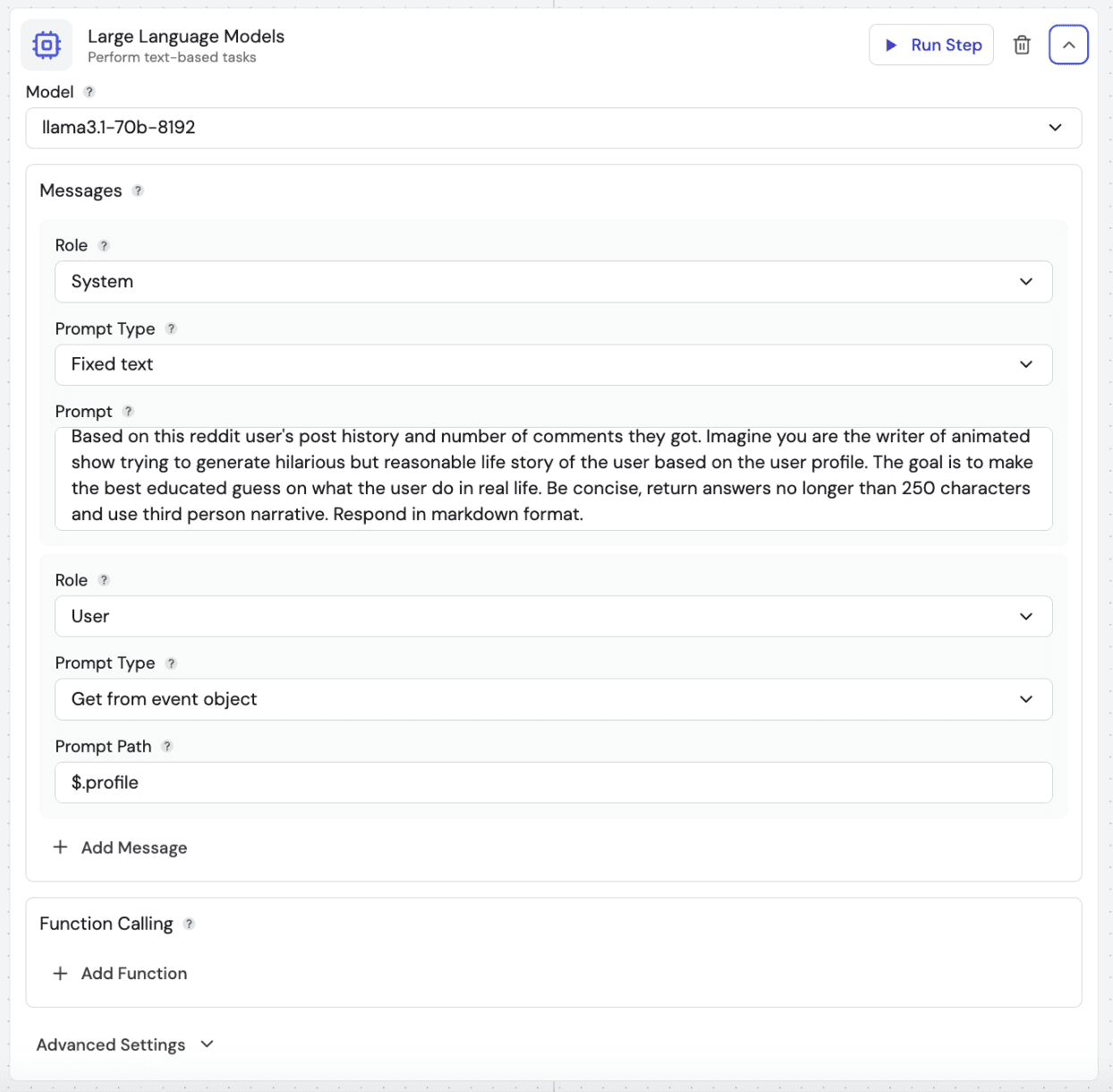
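The workflow does this with SQL nodes. To show the logic of the three steps, here is an equivalent sketch in TypeScript rather than Fleak SQL. It assumes the Lambda returns a standard Reddit listing, where each post sits under `data.children[].data` with `subreddit`, `title`, and `num_comments` fields. The function names and the output line format are made up for this example.

```typescript
// Minimal slice of a Reddit listing: only the fields used below.
interface RedditPost {
  subreddit: string;
  title: string;
  num_comments: number;
}

interface RedditListing {
  data: { children: { data: RedditPost }[] };
}

// Step 1: pull the list of posts out of the listing envelope.
function listPosts(listing: RedditListing): RedditPost[] {
  return listing.data.children.map((child) => child.data);
}

// Step 2: keep only the key details of each post, dropping any other fields.
function pickDetails(posts: RedditPost[]): RedditPost[] {
  return posts.map(({ subreddit, title, num_comments }) => ({
    subreddit,
    title,
    num_comments,
  }));
}

// Step 3: fold everything into a single text value for the prompt.
function toPromptText(posts: RedditPost[]): string {
  return posts
    .map((p) => `r/${p.subreddit}: "${p.title}" (${p.num_comments} comments)`)
    .join("\n");
}

const sample: RedditListing = {
  data: {
    children: [
      { data: { subreddit: "typescript", title: "Generics tips", num_comments: 12 } },
      { data: { subreddit: "aws", title: "Lambda cold starts", num_comments: 3 } },
    ],
  },
};

console.log(toPromptText(pickDetails(listPosts(sample))));
// r/typescript: "Generics tips" (12 comments)
// r/aws: "Lambda cold starts" (3 comments)
```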

Step 3: LLM inference

Now that the data is prepared, we're ready to pass it into an LLM node. In the prompt, we ask the LLM to generate a brief yet meaningful life story of the user based on the information we've gathered in the previous steps.

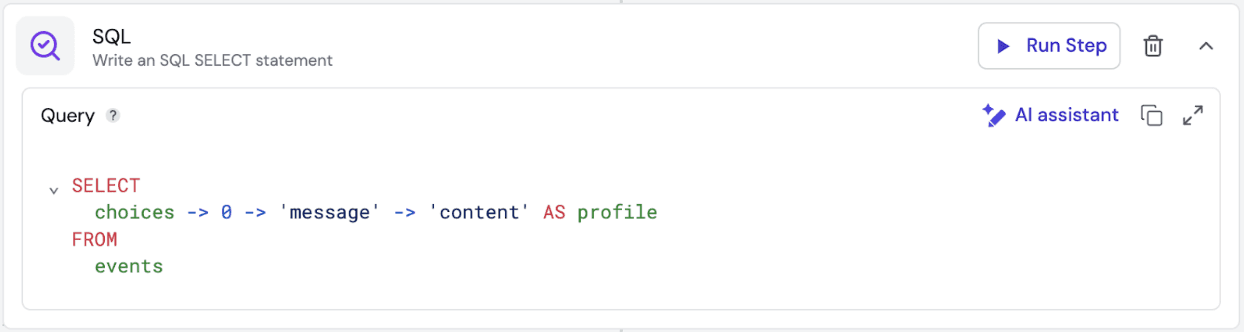
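A prompt for this step could be assembled roughly as follows. This is only a sketch: the wording of both messages is hypothetical and not the prompt used in the app, the system/user message layout assumes a chat-style LLM, and `MAX_ACTIVITY_CHARS` is an arbitrary cap added here so a very active user does not overflow the prompt.

```typescript
interface ChatMessage {
  role: "system" | "user";
  content: string;
}

// Illustrative limit on how much activity text is sent, in characters.
const MAX_ACTIVITY_CHARS = 4000;

function buildMessages(username: string, activity: string): ChatMessage[] {
  // Keep only the first MAX_ACTIVITY_CHARS characters of the activity text.
  const clipped = activity.slice(0, MAX_ACTIVITY_CHARS);
  return [
    {
      role: "system",
      content:
        "You write short, friendly character sketches in Markdown. " +
        "Rely only on the activity provided.",
    },
    {
      role: "user",
      content: `Reddit user: ${username}\n\nRecent activity:\n${clipped}`,
    },
  ];
}
```

Keeping the instructions in the system message and the user's data in the user message makes it easier to adjust the tone of the output without touching the data formatting.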

Finally, we add an SQL node to extract the content generated by the LLM. This step retrieves the main text or response created by the LLM from the events data and gets it ready for the final output.
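In the workflow this extraction is a SQL node. The same idea, sketched in TypeScript, looks like this. It assumes an OpenAI-style chat completion response, where the text lives at `choices[0].message.content`. Other providers structure their responses differently, and `extractContent` is a hypothetical helper name.

```typescript
// Assumed OpenAI-style response shape; every level is optional so that a
// malformed or empty response does not crash the extraction.
interface ChatCompletion {
  choices?: { message?: { content?: string | null } }[];
}

// Return the generated Markdown, or the fallback when nothing usable is there.
function extractContent(response: ChatCompletion, fallback = ""): string {
  const content = response.choices?.[0]?.message?.content;
  if (typeof content !== "string") {
    return fallback;
  }
  const trimmed = content.trim();
  return trimmed !== "" ? trimmed : fallback;
}

const reply: ChatCompletion = {
  choices: [{ message: { content: "  **A curious tinkerer.**  " } }],
};
console.log(extractContent(reply)); // prints "**A curious tinkerer.**"
```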

Step 4: Publishing the Workflow as an API

Once the workflow is set up, publishing it as an API in Fleak is super easy—just one click, and it's live as a production-ready API. From there, you can access it through any HTTP client, making it simple to integrate with your existing apps and services.

Magnify your Workflows with Fleak's API Integration

At Fleak, we simplify the process of combining tools like AWS Lambda, Large Language Models (LLMs), and SQL data transformations into a single, streamlined workflow. Instead of juggling these components individually, Fleak consolidates everything into one API, making it easier to build advanced data transformations with minimal development time and operational complexity. You can check out our documentation to see how to set up an AWS Lambda function from scratch.

If you're interested in tailoring this setup for your specific needs, you can always book a demo on Fleak.ai—we'd be happy to walk you through it!
