Tired of fragile log parsing? Learn to build a robust, real-time pipeline. This guide covers architecture, tools, and scalable strategies to handle complex logs and avoid late-night alerts for good.

By Bo Lei, Co-Founder & CTO, Fleak

If you've ever been tasked with building a real-time log parsing pipeline, you're likely familiar with the challenges. What starts as a straightforward goal—parse logs and send them to a downstream system—can quickly turn into complex regex patterns, late-night alerts for parsing failures, and explaining stale dashboard data to stakeholders.

After building pipelines for application logs and IoT sensor data, I've learned some practical lessons about creating a system that reliably handles a diverse and unpredictable mix of log formats.

The Hidden Complexities of Log Parsing

The main challenge is handling different data sources. In a typical environment, you might have logs coming from:

Application servers writing custom JSON.

Legacy systems that generate fixed-width text files.

Third-party services sending data via CSV webhooks.

The occasional service that uses XML.

Each format comes with its own set of rules and inconsistencies. Timestamps might be in UTC or a local time zone. Delimiters can be tabs, pipes, or commas. Some systems truncate long fields, while others wrap them across multiple lines. Your task is to transform this disparate collection of data into a clean, standardized format that downstream systems can effectively use.

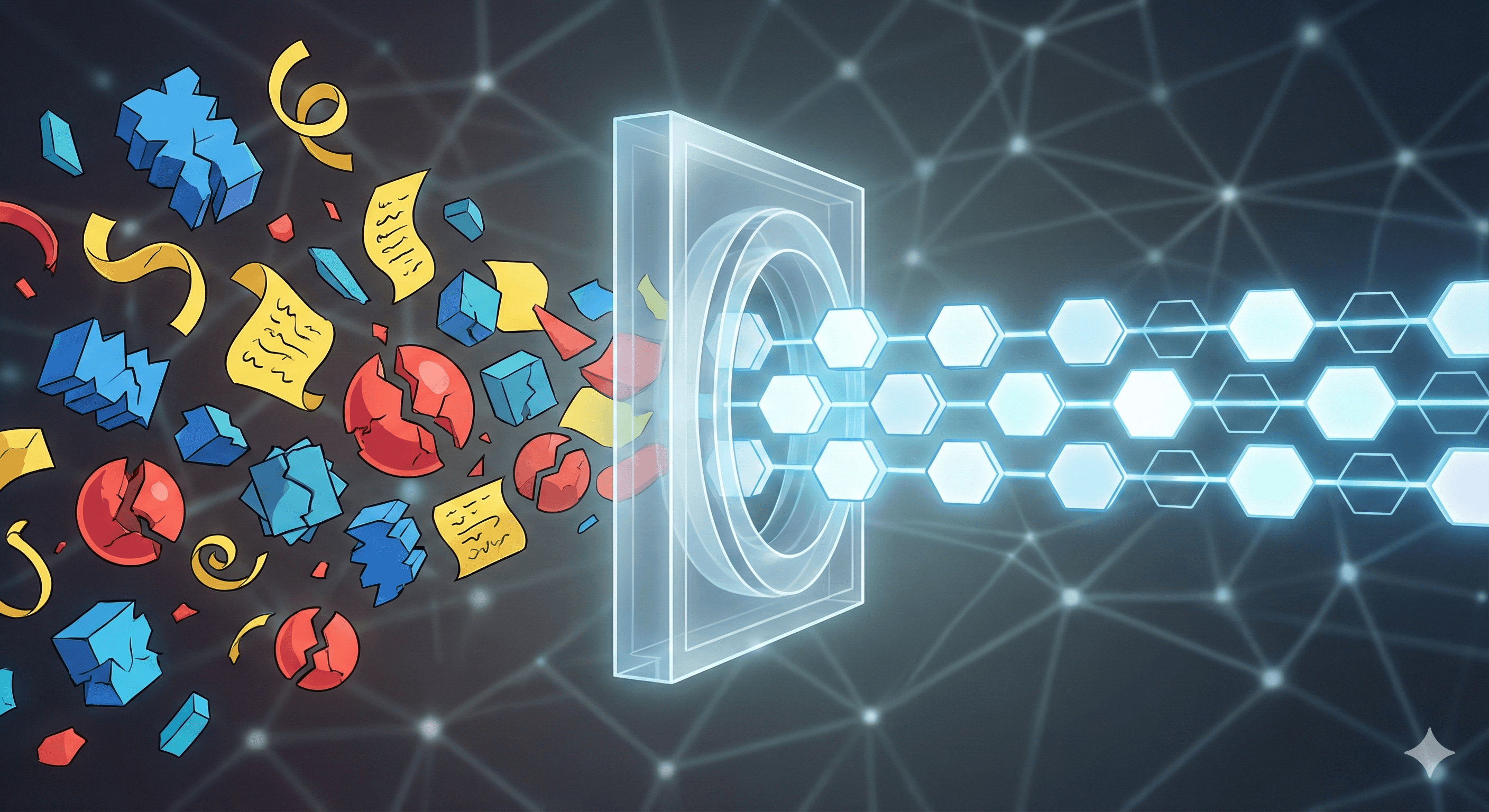

An Architecture for Stability and Resilience

One of the most common mistakes is attempting to parse logs synchronously as they are ingested. This approach is fragile and often leads to data loss and operational headaches.

A more robust solution is to place a message queue between the ingestion and parsing stages. Apache Kafka is a popular choice for this, but managed services like AWS Kinesis or Google Cloud Pub/Sub can reduce operational overhead.

The workflow looks like this:

Ingestion: Raw logs are written to Kafka topics as quickly as they arrive.

Processing: Stream processing applications consume the raw logs from Kafka and perform the parsing.

Output: The parsed, structured data is written to storage systems like Elasticsearch, Amazon S3, or Google BigQuery.

This creates a buffer. If log volume suddenly increases—perhaps due to a production service switching to debug-level logging—the incoming data queues up in Kafka instead of being dropped, giving your parsing system time to catch up.
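The decoupling described above can be sketched in a few lines. This is only an illustration: Python's in-memory queue.Queue stands in for a Kafka topic, a list stands in for the storage system, and the names ingest, process, and run_pipeline are hypothetical. A real deployment would use a Kafka (or Kinesis, or Pub/Sub) client instead.

```python
import json
import queue
import threading

# Assumption: an in-memory queue stands in for a Kafka topic.
raw_logs = queue.Queue()
# Assumption: a list stands in for Elasticsearch, S3, or BigQuery.
parsed_store = []


def ingest(lines):
    """Ingestion: enqueue raw lines as they arrive, without parsing them."""
    for line in lines:
        raw_logs.put(line)
    raw_logs.put(None)  # sentinel marking the end of input


def process():
    """Processing: consume at the parser's own pace and write structured output."""
    while True:
        line = raw_logs.get()
        if line is None:
            break
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            # Keep the raw line instead of dropping it.
            record = {"raw": line, "parse_error": True}
        parsed_store.append(record)


def run_pipeline(lines):
    """Run ingestion and processing concurrently; return the parsed records."""
    parsed_store.clear()
    worker = threading.Thread(target=process)
    worker.start()
    ingest(lines)
    worker.join()
    return list(parsed_store)
```

Because ingest never waits on the parser, a burst of input only makes the queue grow; the consumer drains it when it can.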

Choosing the Right Tool for the Job

The stream processing ecosystem offers several types of tools for building these pipelines. Each has trade-offs to weigh based on your team's expertise and project requirements.

Traditional Programming Frameworks

Frameworks like Apache Flink and Spark Streaming allow you to write your parsing logic in general-purpose languages such as Java, Scala, or Python. This gives you maximum control to implement any custom logic you might need, but it also means you are responsible for developing and maintaining a distributed application.

This approach is powerful for complex, multi-stage processing but can be more than is necessary for pipelines focused primarily on parsing and routing.

SQL-Based Processing

Tools such as ksqlDB and Flink SQL allow you to define data transformations using SQL queries.

Since SQL is familiar to many developers and data analysts, these tools can be very accessible. However, SQL was not originally designed for intricate string manipulation or conditional parsing logic. As requirements become more complex, such as handling nested data or applying different rules based on log content, you may encounter the limitations of a SQL-based approach.

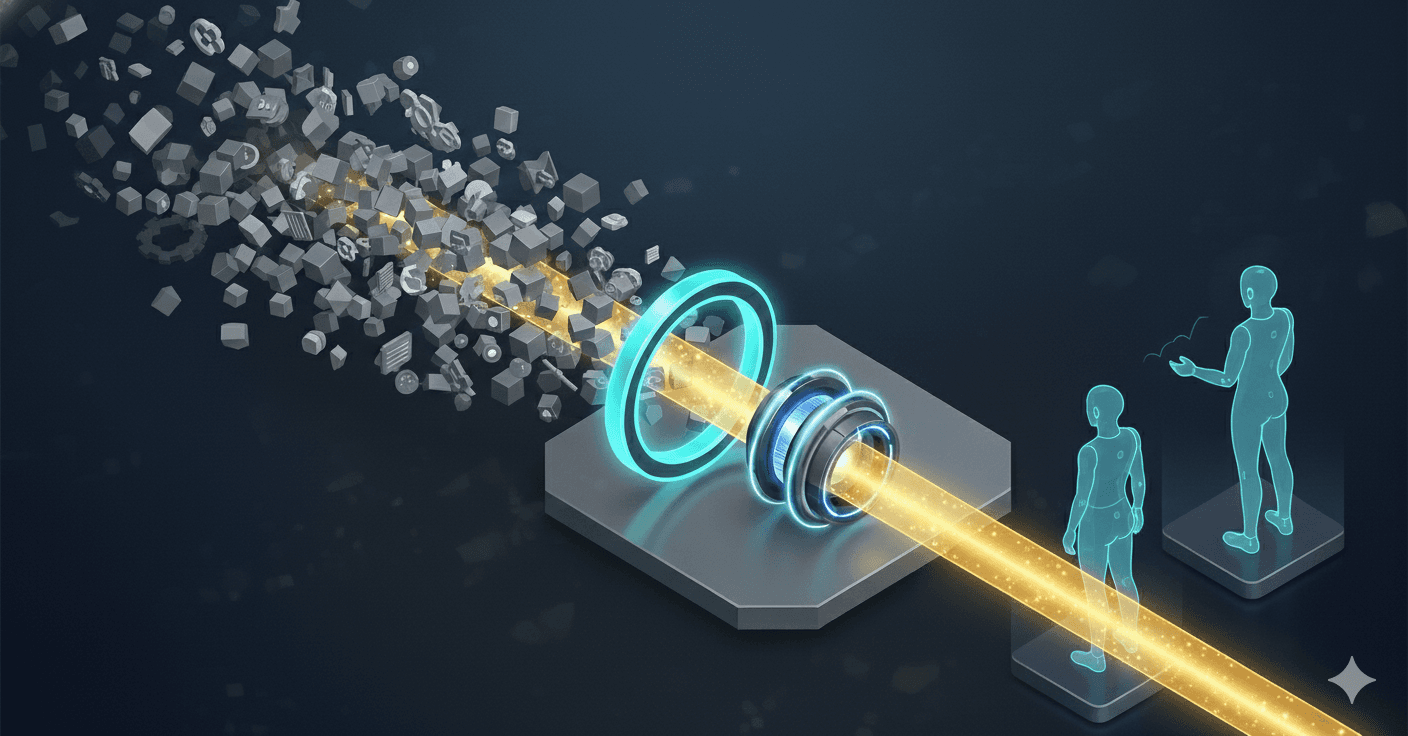

Configuration-Driven Tools

A third category of tools is purpose-built for data transformation. Instead of expressing logic in a general-purpose programming language or SQL, these tools use declarative configurations, often combined with a specialized expression language.

For instance, a lightweight stream processor like ZephFlow uses a Directed Acyclic Graph (DAG) to define the data flow, where each step is configured with a concise expression.

These tools handle common tasks like parsing, filtering, and routing data, without the operational complexity of managing a full distributed application.

Scalable Parsing Strategies

To build a lasting pipeline, consider strategies that account for future growth, changing requirements, and inconsistent production data.

Start Simple and Enhance Progressively

The most maintainable parsing pipelines often start with a simple goal and evolve over time. Instead of trying to parse every field from every log source on day one, begin by extracting only the essentials. A timestamp and a message are often enough to provide immediate value. For an Apache access log, you might start with just the timestamp, status code, and requested URL, which can address a majority of common debugging needs.

Once the pipeline is stable, you can incrementally add more detailed structured parsing, extracting fields like user IDs or request IDs. The next phase is often enrichment, where you add derived data such as response time calculations or geo-IP lookups. It's good practice to design these enrichment steps as independent stages that can fail gracefully. If a geo-IP service is unavailable, the log should still proceed through the pipeline, perhaps with a field indicating the lookup failed, rather than dropping the entire log.

Finally, a normalization step ensures that all your logs adhere to a consistent schema. This might involve converting all timestamps to UTC or standardizing field names across different sources.
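As a rough sketch of these stages, the following assumes an Apache access log in the common log format. The function names, the geo_lookup_failed field, and the lookup callable are hypothetical choices for illustration, not part of any particular tool.

```python
import re
from datetime import datetime, timezone

# Stage 1: extract only the essentials (timestamp, URL, status code).
ACCESS_RE = re.compile(
    r'\[(?P<ts>[^\]]+)\] "(?P<method>\S+) (?P<url>\S+)[^"]*" (?P<status>\d{3})'
)


def extract_essentials(line):
    m = ACCESS_RE.search(line)
    if m is None:
        return None
    return {"timestamp": m["ts"], "url": m["url"], "status": int(m["status"])}


# Stage 2: enrichment as an independent step that fails gracefully.
def enrich_geo(record, client_ip, lookup):
    """`lookup` is a hypothetical callable wrapping a geo-IP service."""
    try:
        record["geo"] = lookup(client_ip)
    except Exception:
        # The log continues through the pipeline, flagged rather than dropped.
        record["geo"] = None
        record["geo_lookup_failed"] = True
    return record


# Stage 3: normalization, here converting the timestamp to UTC.
def normalize(record):
    # Note: %b (month abbreviation) depends on the process locale.
    ts = datetime.strptime(record["timestamp"], "%d/%b/%Y:%H:%M:%S %z")
    record["timestamp"] = ts.astimezone(timezone.utc).isoformat()
    return record
```

Each stage takes and returns a plain dictionary, so a new stage can be added later without touching the earlier ones.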

Externalize Your Parsing Rules

Embedding parsing logic directly into your application code can create significant maintenance challenges. Every time a log format is modified, the entire pipeline may need to be redeployed.

A better approach is to externalize this logic. In a configuration-driven tool like ZephFlow, the entire pipeline definition, including the DAG structure and all parsing rules, is declared separately from the execution engine.

The benefit of this approach is that the pipeline's behavior lives in configuration files, not compiled code. When a log format changes, you can update the configuration and reload it, often without restarting the underlying engine. This separation of the engine from the behavior makes the pipeline much easier to manage and update.
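To make the idea concrete, here is a minimal sketch of externalized rules in plain Python. It is not ZephFlow's configuration format: the JSON layout, the rule name app_v1, and the RuleEngine class are all assumptions made for illustration. The rules are embedded as a string so the example is self-contained; in practice they would live in a file or a config service.

```python
import json
import re

# Assumption: rules are stored as JSON, mapping a rule name to a regex
# with named groups. Backslashes are doubled because this is JSON text.
RULES_JSON = r"""
{
  "app_v1": "^(?P<timestamp>\\S+) (?P<level>[A-Z]+) (?P<message>.*)$"
}
"""


def load_rules(text):
    """Compile every rule; raises if the JSON or any regex is invalid."""
    return {name: re.compile(p) for name, p in json.loads(text).items()}


class RuleEngine:
    """The engine stays the same; only the rules it holds change."""

    def __init__(self, text):
        self.rules = load_rules(text)

    def reload(self, text):
        # Compile first, then swap, so a bad config never replaces a good one.
        self.rules = load_rules(text)

    def parse(self, rule_name, line):
        m = self.rules[rule_name].match(line)
        return m.groupdict() if m else None
```

When a format changes, calling reload with the updated configuration changes parsing behavior without redeploying the code around it.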

Handle Multi-Format Logs

A single pipeline often needs to process multiple log formats. You can accomplish this by building a routing layer that directs different log types to the correct parsers. This is often done by inspecting the raw log for a pattern unique to a specific format.

For example, a ZephFlow DAG can route NGINX and JSON logs to different parsers before merging them into a normalized structure.

In this design, initial filter nodes check for format-specific patterns. NGINX logs are identified by the - - [ pattern, while JSON logs are identified by curly braces. After parsing, all logs are sent to a final normalization step to ensure a consistent output schema. When a new log format needs to be supported, you can add a new detection filter and a corresponding parser branch without modifying the existing logic.
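The same routing idea can be sketched in plain Python. This is not ZephFlow syntax; the detector and parser functions, the ROUTES table, and the output field names are hypothetical, and the NGINX pattern assumes the default combined access log layout.

```python
import json
import re

NGINX_RE = re.compile(
    r'^(?P<client>\S+) - \S+ \[(?P<timestamp>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3})'
)


def is_nginx(raw):
    return "- - [" in raw


def is_json(raw):
    s = raw.strip()
    return s.startswith("{") and s.endswith("}")


def parse_nginx(raw):
    m = NGINX_RE.match(raw)
    if m is None:
        raise ValueError("line does not match the NGINX pattern")
    return {"timestamp": m["timestamp"], "message": m["request"],
            "status": int(m["status"])}


def parse_json(raw):
    data = json.loads(raw)
    return {"timestamp": data.get("timestamp"), "message": data.get("message")}


# Supporting a new format means adding one (name, detector, parser) entry.
# Detectors are tried in order, so the first match wins.
ROUTES = [
    ("nginx", is_nginx, parse_nginx),
    ("json", is_json, parse_json),
]


def route(raw):
    """Send the line to the first matching parser and tag the source format."""
    for name, detect, parse in ROUTES:
        if detect(raw):
            return {"source_format": name, **parse(raw)}
    return {"source_format": "unknown", "raw": raw}
```

Lines that match no detector are returned with their raw content intact, so they can be inspected later instead of disappearing.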

Design Your Target Schema First

Before you write any parsing code, define the ideal structure of your output data. A schema defined up front keeps logs consistent and tells consumers what to expect.

Once the schema is defined, consider the serialization format. While JSON is easy to work with, binary formats like Apache Avro or Protocol Buffers are more efficient at scale, offering smaller data sizes and faster serialization. They also provide support for schema evolution. For example, with Avro, you can add new fields with default values to your schema without breaking older consumers.

However, these formats add complexity, requiring schema registries and specialized tools. Many teams choose to use JSON for its simplicity, accepting the performance trade-offs. Choose based on your volume, latency needs, and team capacity.
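For teams that stay with JSON, the target schema can still be written down in code. The sketch below uses a Python dataclass as a loose analogy to schema evolution with defaults; it is not Avro, and the LogRecord fields are hypothetical examples.

```python
from dataclasses import dataclass, asdict, fields
from typing import Optional


@dataclass(frozen=True)
class LogRecord:
    # Required fields: every record must carry these.
    timestamp: str  # ISO 8601, UTC
    source: str
    message: str
    # Fields added later get defaults, so older producers remain valid.
    level: str = "UNKNOWN"
    request_id: Optional[str] = None


def from_dict(data):
    """Build a record, ignoring unknown keys.

    Raises TypeError if a required field is missing.
    """
    known = {f.name for f in fields(LogRecord)}
    return LogRecord(**{k: v for k, v in data.items() if k in known})
```

A record built from older data simply picks up the defaults, and asdict(record) produces the dictionary to serialize as JSON.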

Error Handling: Plan for Imperfection

Production logs are notoriously unpredictable. Design your pipeline to handle errors at each stage.

Dead-Letter Queues: A Critical Safety Net

A dead-letter queue (DLQ) is a destination for logs that cannot be parsed or processed correctly. Instead of causing the pipeline to crash or drop data, problematic messages are sent to the DLQ for later analysis.

A DLQ serves several important functions:

Early Warning: A sudden increase in messages to the DLQ can signal an upstream change or data corruption issue.

Debugging: The quarantined logs provide concrete examples of data that is breaking your parsers.

Audit Trail: It demonstrates that data isn't being lost, merely set aside for correction.

Recovery: After fixing a parser, you can often replay the messages from the DLQ to recover the data.

For a DLQ to be effective, the messages sent to it should be enriched with metadata, such as why the parsing failed, which parser was used, and a timestamp of the failure. This context helps with debugging.
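A minimal sketch of this pattern follows. A Python list stands in for the DLQ topic, and the envelope field names (parser, error_type, failed_at) are assumptions; it also assumes that a successful parse never returns None.

```python
from datetime import datetime, timezone

# Assumption: a list stands in for a real DLQ topic or table.
dead_letters = []


def to_dead_letter(raw, parser_name, error):
    """Wrap a failed message with the context needed for debugging."""
    return {
        "raw": raw,
        "parser": parser_name,
        "error_type": type(error).__name__,
        "error": str(error),
        "failed_at": datetime.now(timezone.utc).isoformat(),
    }


def parse_or_quarantine(raw, parser_name, parser):
    """Return the parsed record, or None after sending the message to the DLQ."""
    try:
        return parser(raw)
    except Exception as exc:
        dead_letters.append(to_dead_letter(raw, parser_name, exc))
        return None


def replay(parser_name, parser):
    """Retry quarantined messages after a fix; failures go back to the DLQ."""
    pending = list(dead_letters)
    dead_letters.clear()
    recovered = []
    for entry in pending:
        result = parse_or_quarantine(entry["raw"], parser_name, parser)
        if result is not None:
            recovered.append(result)
    return recovered
```

Counting entries by error_type over time gives the early-warning signal described above.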

Partial Parsing

When a log message cannot be fully parsed, it's often better to extract what you can rather than nothing at all. Even just pulling out a timestamp and preserving the raw message provides some value and ensures that data continues to flow while you work on improving the parser.
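One way to sketch this fallback is shown below. The full-line pattern assumes a hypothetical "timestamp LEVEL [service] message" layout, and the parse_status field is an illustrative convention, not a standard.

```python
import re

# Hypothetical full format: "<timestamp> <LEVEL> [<service>] <message>"
FULL_RE = re.compile(
    r"^(?P<timestamp>\S+) (?P<level>[A-Z]+) \[(?P<service>[^\]]+)\] (?P<message>.*)$"
)
# Fallback: find an ISO 8601 timestamp anywhere in the line.
ISO_TS_RE = re.compile(
    r"\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2}(?:\.\d+)?(?:Z|[+-]\d{2}:\d{2})?"
)


def parse_with_fallback(raw):
    """Parse fully when possible; otherwise keep the timestamp and raw text."""
    m = FULL_RE.match(raw)
    if m:
        return {**m.groupdict(), "raw": raw, "parse_status": "full"}
    ts = ISO_TS_RE.search(raw)
    return {
        "timestamp": ts.group(0) if ts else None,
        "raw": raw,
        "parse_status": "partial" if ts else "failed",
    }
```

Keeping the raw line on every record means a later, better parser can reprocess it.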

Version Your Parsers

Log formats will change over time. It's wise to build versioning into your pipeline from the beginning. This could involve running multiple versions of a parser in parallel during a transition period or routing logs to different parsers based on their timestamps.
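Both options can be sketched briefly. The two formats, the cutover date, and the function names here are hypothetical.

```python
from datetime import datetime, timezone

# Hypothetical date on which the service switched log formats.
CUTOVER = datetime(2024, 6, 1, tzinfo=timezone.utc)


def parse_v1(raw):
    """Old format: 'LEVEL message'."""
    level, _, message = raw.partition(" ")
    return {"level": level, "message": message, "parser_version": 1}


def parse_v2(raw):
    """New format: 'LEVEL|service|message'. Raises ValueError on mismatch."""
    level, service, message = raw.split("|", 2)
    return {"level": level, "service": service, "message": message,
            "parser_version": 2}


def parse_by_time(raw, event_time):
    """Option 1: pick the parser from the log's own timestamp."""
    parser = parse_v2 if event_time >= CUTOVER else parse_v1
    return parser(raw)


def parse_newest_first(raw):
    """Option 2: during a transition, try the newest version, then fall back."""
    for parser in (parse_v2, parse_v1):
        try:
            return parser(raw)
        except ValueError:
            continue
    return None
```

Recording parser_version on each output record makes it easy to see which version produced a given result when debugging.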

Performance Considerations

At scale, small inefficiencies can have a large impact.

Profile Your Regex Patterns: A poorly written regular expression can be significantly slower than an optimized one. The difference between a greedy pattern (.*) and a more specific, non-backtracking pattern can be orders of magnitude. Always test your patterns against representative data.

Pre-Filter Where Possible: If you only need to process a subset of logs (e.g., those with ERROR severity), discard the rest before they reach the expensive parsing stage. A simple string search is much faster than complex pattern matching and can dramatically reduce the load on your parsers.
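Both points can be tried out with a small harness like the one below. The two patterns and the sample format are illustrative assumptions. The actual speed difference depends heavily on your data and regex engine, so measure with representative lines rather than relying on this sketch.

```python
import re
import timeit

# Greedy version: '.*' consumes the whole line and then backtracks.
GREEDY = re.compile(r'.*"(.*)" (\d{3}) .*')
# More specific version: negated character classes limit backtracking.
SPECIFIC = re.compile(r'[^"]*"([^"]*)" (\d{3}) ')


def time_pattern(pattern, line, number=10_000):
    """Return the seconds taken to match `line` `number` times."""
    return timeit.timeit(lambda: pattern.match(line), number=number)


def prefilter(lines, needle="ERROR"):
    """Cheap substring check that runs before any regex parsing."""
    return [line for line in lines if needle in line]
```

Run time_pattern for each candidate pattern over a sample of real production lines, including unusually long and malformed ones, since those are often where backtracking costs show up.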

Monitoring: Ensure Pipeline Health

A well-monitored pipeline provides visibility into its performance and is crucial for maintaining reliability.

Input and Output Rates: Track the volume of logs entering and leaving the pipeline, broken down by source. Sudden spikes or drops can indicate problems with upstream services.

Parse Success/Failure Rates: Monitor the percentage of logs that are parsed successfully, categorized by log type. A drop in the success rate often points to a new or changed log format.

Processing Latency: Measure the end-to-end time it takes for a log to move from generation to its final destination. High latency can indicate bottlenecks in your pipeline.

Error Categorization: Don't just count errors; categorize them. Understanding why parsing is failing (e.g., malformed timestamps, missing fields) helps you prioritize fixes.

A monitoring dashboard can show data flow, success rates, latency, and error patterns.
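As a starting point, the metrics above can be tracked with simple in-process counters. The PipelineMetrics class and its method names are hypothetical; a production system would typically export these values to a metrics backend instead.

```python
from collections import Counter


class PipelineMetrics:
    """In-memory counters for parse outcomes, error categories, and latency."""

    def __init__(self):
        self.parsed = Counter()            # successes per log type
        self.failed = Counter()            # failures per log type
        self.error_categories = Counter()  # failures per category
        self.latencies = []                # end-to-end seconds per log

    def record_success(self, log_type, latency_seconds):
        self.parsed[log_type] += 1
        self.latencies.append(latency_seconds)

    def record_failure(self, log_type, category):
        self.failed[log_type] += 1
        self.error_categories[category] += 1

    def success_rate(self, log_type):
        """Fraction of logs parsed successfully, or None if none were seen."""
        total = self.parsed[log_type] + self.failed[log_type]
        return self.parsed[log_type] / total if total else None

    def max_latency(self):
        return max(self.latencies) if self.latencies else None
```

Calling error_categories.most_common(3) gives a quick view of which failure reasons to fix first.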

Common Pitfalls to Avoid

Over-Engineering Too Early: Start with a simple, direct solution. Unnecessary layers of abstraction for "future flexibility" can become a burden.

Ignoring Time Zones: Standardize on UTC for all timestamps. This will prevent a host of problems related to time zone conversions.

Neglecting Schema Evolution: Log formats will change. Design your system to accommodate these changes from the start.

Underestimating Operational Overhead: Every new tool in your stack requires monitoring, upgrades, and maintenance.

Striving for Perfection: Aim for a high level of parsing accuracy, but recognize that achieving 100% may not be practical or cost-effective. Often, 95% accuracy is sufficient.

Conclusion

While building log parsing pipelines is complex, a structured approach makes it manageable. Keep it as simple as possible while handling the inherent complexity.

Begin with a robust architecture that decouples ingestion from processing. Select tools that align with your team's skills and your system's scale. By implementing the pipeline in stages, continuously monitoring key metrics, and designing for potential failures, you can build a reliable system that makes your log data a valuable asset.
